MariaDB

For emergencies, check the subpage about MariaDB troubleshooting.

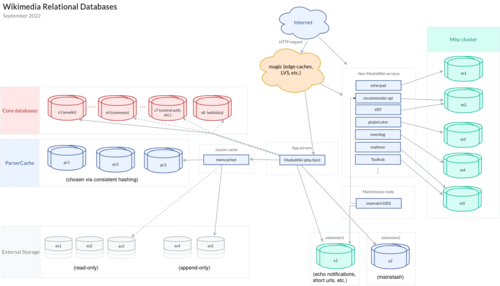

MariaDB is the main database management system used to run the Wikimedia sites.

For a general overview, check the MySQL@Wikipedia (2015) slides (MariaDB is a drop-in replacement for MySQL, which we used to use).

Sections and shards

For a full list, look at db.php.

Check Orchestrator (NDA required) for the db tree and the replication status.

Core MediaWiki databases

- s1: English Wikipedia

- s2: 17 large wikis, including Chinese, Italian, Dutch, Polish, and Portuguese Wikipedias and English Wiktionary

- s3: Most small wikis (~900)

- s4: Commons, Testcommons

- s5: German Wikipedia, some other large wikis, and most new wikis since mid-2020 (T259438)

- s6: French, Japanese, and Russian Wikipedias, and Wikitech

- s7: CentralAuth, Meta, 10 large Wikipedias, and French Wiktionary

- s8: Wikidata

External storage

The external storage servers host the full text of all page revisions in a compressed format.

- es1: read-only cluster

- es2: read-only cluster

- es3: read-only cluster

- es4: read-only cluster

- es5: read-only cluster

- es6: read/write cluster

- es7: read/write cluster

Parsercaches

pc* hosts store the largest, disk-based part of the parser cache mediawiki service (for example, temporary parsed HTML output sent to users). While the service is very important for performance optimization, individually, and in general, rows can be lost, as they will be automatically inserted back when re-parsed, on next cache miss.

Because data loss is not a concern, but read only could cause application errors, unlike most production dbs, parsercaches are started by default in read-write mode, so they are writable by the application at all times.

Its topology follows the same structure as core hosts (s*)- normally, they are written in the primary datacenter and replicated to the secondary, but there has been proposals to make them work like x2 (local and independent on each datacenter) in the future.

- pc1-pc3: parser caches

Extension storage

x1

The x1 cluster is used by MediaWiki at WMF for databases that are "global" or "cross-wiki" in nature, and are typically associated with a MediaWiki extension. Apart from being used across wikis, it is otherwise configured, replicated, and queries in the same way as "core" databases.

Tables that don't need to join with core tables can (and should) live in x1, there is a wiki db for per-wiki tables.

| Feature | Database | Phabricator project | Steward |

|---|---|---|---|

| BounceHandler | wikishared.bounce_*

|

||

| CampaignEvents | wikishared.campaign_events, wikishared.ce_*, [wiki].campaign_events, [wiki].ce_*

|

Campaigns Product Team | |

| Cognate | cognate_wiktionary.*

|

||

| ContentTranslation | wikishared.cx_*

|

Language and Product Localization | |

| Echo | wikishared.echo_*, and

|

Growth Team | |

| Flow | flowdb.*

|

Growth Team | |

| GrowthExperiments | [wiki].growthexperiments_*

|

Growth Team | |

| ReadingLists | wikishared.reading_list_*

|

||

| UrlShortener | wikishared.urlshortcodes

|

||

| WikimediaCampaignEvents | wikishared.wikimedia_campaign_events_grant, [wiki].wikimedia_campaign_events_grant

|

Campaigns Product Team | |

| WikimediaEditorTasks | wikishared.wikimedia_editor_tasks_*

|

x2

- Main article: mw:MainStash

This cluster is reserved for use by the MainStash. It is read-write in all data centers and replicated in both directions.

This cluster can be visualized graphically at: https://orchestrator.wikimedia.org/web/cluster/alias/x2 (NDA needed)

The replication topology is master-master, meaning that eqiad master and codfw master replicate from each other and they are both writable. Those clusters do not use the replicas for reads, everything goes to the master. The replicas are there for redundancy purposes. In case of replica failure there is no need to depool anything as they aren't in use.

Like x1 and parsercache, x2 is shared by all wikis. This means that to depool the x2 master in a secondary DC, one should depool MW from that DC in general (T315995#8258320).

These hosts use STATEMENT based replication and they have Innodb-flush-log-at-trx-commit = 0 for better performance as data consistency isn't critical.

Miscellaneous

- Main article: MariaDB/misc

The miscellaneous clusters host databases for various auxiliary services.

- m1: Internal SRE services and Etherpad.

- m2: VRTS, debmonitor and others.

- m3: Phabricator, and others.

- m5: Mailman, CXServer, WMCS services, and others.

- db_inventory: Orchestrator, and Zarcillo.

Database creation template

If you need to request a new database please create a task with the DBA tag and filling out the following fields if you know them (or can estimate them)

- QPS:

- Size: (An estimation is fine)

- DB Name:

- User:

- Accessed from server (s):

- Backup Policy: Needed? Frequency?

- Grants needed:

Cloud Services (WMCS) Wiki replicas

- clouddb1013-1020: Wiki replicas (multi-instance)

Data Platform

- an-redacteddb1001: Analytics multi-instance dedicated (sanitized) wikireplica. Used the first days of the month.

- an-mariadb100[1-2]: Analytics Meta instance, serving Hive, Superset, DataHub, Druid.

- matomo1003: Local instance serving matomo

- dbstore100[7-9:] Multi-instance analytics hosts

- db1208: Dedicated backup host

Replicas

The master database is the primary server for a given shard. If the master database server fails, then a replica can be promoted to be a new master. See Master switch for more information.

A database replica (or slave database) is another MariaDB or MySQL instance that replicates data from the master database. Most often, we add replicas in production for the purpose of load-balancing read queries, and for backup/failover purposes.

Cloud Services Wiki Replicas

Copies of the core databases with private data removed are part of the Data Services hosted by Wikimedia Cloud Services for bot, tool, and analysis use. The redaction is done during an intermediate stop on Sanitarium.

Wiki Replicas run in multiple hosts, with multiple processes, see Portal:Data_Services/Admin/Wiki_Replicas for more details.

To depool a Wiki Replica host, see Portal:Data Services/Admin/Runbooks/Depool wikireplicas.

Analytics MariaDB cluster

The Analytics MariaDB cluster contains full replicas of the core and extension storage databases.

Database backups

- Main article: MariaDB/Backups

In 2017, multiple hardware and scalability issues lead to a focus and complete redesign of the database backup architecture, leading to the current one explained at MariaDB/Backups, with focus on full coverage, validation and testing and full automation recovery.

Rebooting or reimaging a host

The procedure for safely rebooting or reimaging a database host is described at MariaDB/Rebooting_a_host.

Packages

Asher started us using stock upstream packages from mariadb.org with the debs "de-debianized" each time to have fewer hooks, allowing puppet to handle stuff. Simply:

apt install wmf-mariadb10X

Where X is the major version, ie:

MariaDB 10.4: wmf-mariadb104

MariaDB 10.6: wmf-mariadb106

Puppet controls manually the systemctl script and the package uses update-alternatives to update the symlinks in /usr/local/bin. Unlike the upstream versions our packages are fine to install alongside one another.

Installation

See mariadb roles in puppet.

Hardware installation checklist

Once the physical racking and OS installation has been, normally by DCOPs, the following items must be checked before considering the host ready to receive data.

- Amount of memory showing up on the OS matches the expected

- RAID setup

- RAID level (typically 10)

- RAID stripe size (256K)

- BBU in place and policy set to WriteBack

- Amount of disk space available for srv matches the expected

- Number of CPUs matches the expected value

Setting up a fresh server

- To initialize the database:

/opt/wmf-mariadb106/scripts/mysql_install_db --basedir=/opt/wmf-mariadb106/- If this is a multi-instance db server, you'll need to provide

--datadir=/srv/sqldata.<SECTION>as well.

- If this is a multi-instance db server, you'll need to provide

- Start the service:

systemctl start mariadb- For multi-instance, the service is

mariadb@<SECTION>

- For multi-instance, the service is

- Set up defaults:

/opt/wmf-mariadb106/bin/mysql_secure_installation --basedir=/opt/wmf-mariadb106/ -S /run/mysqld/mysqld.sock- For multi-instance, change it to

-S /run/mysqld/mysqld.<SECTION>.sock - Don't set root password, accept defaults for everything else.

- For multi-instance, change it to

Loading Data / provisioning

Setting up a new replica (or repairing a current one) is done through the recovery/provisioning system: MariaDB/Backups

However, one can do still manual hot or cold copies of running or stopped servers through transfer.py script on the cluster management hosts (cumin1002.eqiad.wmnet, cumin2002.codfw.wmnet).

Schema Changes

See Auto schema

First decide if the schema change can be done online. This only works if:

- The table has a PRIMARY KEY or UNIQUE KEY on NOT NULL field(s)

- MediaWiki can ignore the change behind a feature flag

- Table metadata locking has been considered

Offline Schema Changes

Done via Auto schema

Sanitarium and clouddb instances

See more: Sanitarium and clouddb instances

Table Metadata Locking

Both ALTER TABLE need to hold the table metadata lock. They must wait for open transactions to close the table and also block new transactions opening the table. On a busy server and a hot table like page or revision this can easily result in many seconds of delay which is more than enough time for connections to pile up and hit max_connections.

Consider reducing load or depooling the box.

Monitoring

See MariaDB/monitoring.

Replication lag

Production replication lag can be checked on https://orchestrator.wikimedia.org/web/clusters (an NDA is needed to access it).

See MariaDB/troubleshooting#Replication lag and MySQL#Replication lag on how to handle replication lag.

Manipulating the Replication Tree

Orchestrator displays the full replication tree (requires NDA)

Thanks to GTID replication, implemented almost everywhere in production, you can move slaves just by executing:

(Be aware that due to some old gtid_domain_id living in production, the below command needs to be executed carefully, so don't do it unless you know what you are doing)

STOP SLAVE; CHANGE MASTER TO MASTER_HOST='<new master fqdn>'; START SLAVE;

| Manipulating slaves using repl.pl |

|---|

|

This page may be outdated or contain incorrect details. Please update it if you can.

we don't use repl.pl anymore A common task is manipulating slaves within the replication hierarchy. A script exists to help out: operations/software/dbtools/repl.pl. It allows controlling two slaves in a parent/child or sibling/sibling relationship and will do sanity checks plus confirmation prompts. It has nothing to do with masters so don't try to use it for those :-) Child to SiblingMove a slave one layer upward in the hierarchy: ./repl.pl --switch-child-to-sibling --parent=db1007.eqiad.wmnet --child=db1035.eqiad.wmnet Child must be replicating directly from parent. Sibling to ChildMove a slave one layer downward in the hierarchy: ./repl.pl --switch-sibling-to-child --parent=db1007.eqiad.wmnet --child=db1035.eqiad.wmnet Both slaves must be replicating from the same master. Stop Siblings in SyncStop two slaves on the same layer in the hierarchy at a common binlog position: ./repl.pl --stop-siblings-in-sync --host1=db1007.eqiad.wmnet --host2=db1035.eqiad.wmnet Both slaves must be replicating from the same master. |

HAProxy

Main page containing : HAProxy

dbproxy1XXX boxes run HAProxy. Besides making for easier Ops server rotations simply by having a proxy IP in the mix, there are two modes for other stuff: load balancing for slaves and failover for masters. So far, only misc shards masters and the clouddb replicas uses it via dbproxy1XXX.

We also have codfw proxies (dbproxy2XXX) serving misc services.

Failover

See role::mariadb::proxy::master

Load Balancing

See role::mariadb::proxy::slaves

Puppet

The main module for DBAs on the operations/puppet tree is "mariadb", which is in its own repo operations/puppet/mariadb. Remember to update the subrepo when committing changes to the mariadb module, otherwise it will not be caught by palladium, strontium, CI, etc.

Despite all the previous, there are mariadb-related files on the main repo- shared among the classes. Those are the puppet/templates/mariadb/ files, where the main configuration and grants lie.

There is a forth place where you will find mariadb-related files, and that is the private repository, but I suppose that requires no explanation (passwords, etc.).

Other DBA related repos are:

- operations/software/dbtools: for scripts used for maintenance

- operations/software/redactatron: labs filtering

- operations/software/wmfmariadbpy

- operations/software/schema-changes

Long running queries

- Main article: /troubleshooting#Overload_due_to_long_running_queries

You can identify long running queries on logstash. The query killer should stop read queries running longer than 60 seconds, but may not function properly under high load. db-kill can be used in emergencies to kill any query running for longer than 10 seconds.

Storage engines

We use InnoDB for storage engine.

In 2015, TokuDB was trialled but was found to be buggy.

Importing table spaces from other hosts

Transportable tablespaces

Since MySQL 5.6 it is posible to use advantage of transportable table spaces to move around .ibd files from one server to another (if file per table is enabled.

This feature provides a fast way of copying data from one host to another over the network using compression and nc for instance.

To learn more about to copy .ibd files from one host to another, please check out MariaDB/ImportTableSpace.

Account handling

root@localhost account no longer uses passwords for authentication (task T150446), but the UNIX socket authentication plugin. This allows us to stop using passwords (that can be copied, exposed and compromised), and allows the root system user to login from localhost by running:

sudo mysql

or

sudo mysql --skip-ssl

Because mariadb uses the uid of the linux user, there is no need to write passwords to the filesystem anymore, and in the event of a host compromise, only localhost is affected, and not any other host sharing the same password. This could later be extended to other system accounts that only connect from localhost, such as icinga or prometheus. Note that if a superuser is compromised on a host, not having a password is not a further barrier, as root has the ability to manage, read and modify at will MySQL files.

How unix_socket authentication works

Since MariaDB 10.4.3, the plugin is enabled by default

To use it, we have to alter the user we want to authenticate:

GRANT USAGE ON *.* TO root@localhost IDENTIFIED VIA unix_socket;

This will delete the user password, but will indicate it does not uses the mysql native password authentication, but unix socket.

MariaDB [(none)]> SELECT user,host,password,plugin FROM mysql.user WHERE user='root'; +------+--------------+-------------------------------------------+-------------+ | user | host | password | plugin | +------+--------------+-------------------------------------------+-------------+ | root | localhost | | unix_socket |

By the way, note that the authentication name is unix_socket, but the plugin loaded is auth_socket.so. Do not use auth_socket on the GRANT/CREATE USER statements.

Also, if for some reason, you revert this change, make sure you put a password back:

GRANT USAGE ON *.* TO root@localhost IDENTIFIED BY '<super_secure_password>';

Dumping tables with mydumper

What is mydumper?

From the documentation:

* Parallelism (hence, speed) and performance (avoids expensive character set conversion routines, efficient code overall) * Easier to manage output (separate files for tables, dump metadata, etc, easy to view/parse data) * Consistency - maintains snapshot across all threads, provides accurate master and slave log positions, etc * Manageability - supports PCRE for specifying database and tables inclusions and exclusions

Why do we use it

Its speed and parallelism makes it perfect to save time and load data a lot faster.

It is easier to recover single tables or rows.

It compress nicely

From the tests we have seen

- Taking a full dump of s3 takes 1:15h - 88GB

- Taking a full dump of s1 takes 53 minutes - 93GB

- Taking a full dump of s5 takes 1:20h - 93G

Quick cheatsheet

Dump data

Right now and in order to start it - as it doesn't accept any flag as no to read the default file, the following section needs to be commented out on the host my.cnf

# ssl-ca=/etc/ssl/certs/Puppet_Internal_CA.pem # ssl-cert=/etc/mysql/ssl/cert.pem # ssl-key=/etc/mysql/ssl/server.key # ssl-verify-server-cert

Once that is done, a typical way to dump a database:

mydumper -c -h localhost -t 8 -u root -r 100000000 -B wikidatawiki -S /tmp/mysql.sock -o output_directory_name

-c To compress the data files

-h The host

-t How many parallel threads you want dumping at the same time

-u The user

-r Try to split tables into chunks of this many rows

-B Database you want to dump

-S Specify the socket to connect to

"-o" Specify the output directory where you want to save the files to. If not specified, it will generate a directory under your current path called: export-2017xxxxx-xxxxx with all the files.

Load data

Please check the dump data section to make sure you are able to connect (comment out the SSL options).

By default it disables the logging into the binlog

Once that is done, typically you want to run:

myloader -h localhost -t 8 -u root -S /tmp/mysql.sock -d export-xxxxx

-h The host

-t How many parallel threads you want loading the data at the same time

-u The user

-S Specify the socket to connect to

-d The directory you want to import the files from

Production section failover checklist

- It has been suggested that this page should be merged with MariaDB/troubleshooting#Depooling_a_master_(a.k.a._promoting_a_new_slave_to_master)

Switch master

Use https://switchmaster.toolforge.org/schedule to get the ticket and the gerrit patches, if that doesn't work, there is a script: db-switchover that automates most of these steps.

Restarting switch master tool

If a change is made to the templates or anything regarding the tool, please update its code:

ssh login.toolforge.org become switchmaster cd www/python/src/ git pull webservice --backend=kubernetes python3.9 restart

Old switchover steps (not used anymore)

In order to generate the task you can use the script switchover-tmpl.py that lives on operations/software/dbtools/ and will generate the task content. As an example, generate a task for the s1 switchover where db1163 will be the new master.

python3 switchover-tmpl.py s1 db1163

If you want to generate the task manually, use the following checklist:

NEW master: OLD master:

- Check configuration differences between new and old master

pt-config-diff h=NEW.eqiad.wmnet,F=/root/.my.cnf h=OLD.eqiad.wmnet,F=/root/.my.cnf

- Silence alerts on all hosts

- Set NEW master with weight 0 and depool it from its section

dbctl instance NEW edit dbctl config commit -m "Set NEW with weight 0 TXXXXXX"

- Topology changes, connect everything to NEW

db-switchover --timeout=15 --only-slave-move OLD.eqiad.wmnet NEW.eqiad.wmnet

- Disable puppet @NEW and @OLD

puppet agent --disable "switchover to NEW"

- Merge gerrit puppet change to promote NEW to master (Example): https://gerrit.wikimedia.org/r/#/c/operations/puppet/+/538747/

- Start the failover

!log Starting sX failover from OLD to NEW - TXXXXXX dbctl --scope eqiad section sX ro "Maintenance till 05:30AM UTC TXXXXXX" && dbctl config commit -m "Set sX as read-only for maintenance TXXXXXX"

- Check that sX is indeed on read-only

- run switchover script from one of the cluster management hosts (

cumin1002.eqiad.wmnet, cumin2002.codfw.wmnet):

root@cumin1001:~/wmfmariadbpy/wmfmariadbpy# db-switchover --skip-slave-move OLD NEW ; echo OLD; mysql.py -hOLD -e "show slave status\G" ; echo NEW ; mysql.py -hNEW -e "show slave status\G"

- Promote NEW to master and remove read-only, leave OLD (old master) with weight 0 for now

dbctl --scope eqiad section sX set-master NEW && dbctl --scope eqiad section sX rw && dbctl config commit -m "Promote NEW to sX master and remove read-only from sX TXXXXXX"

- Restart puppet on old and new masters (for heartbeat): OLD and NEW

run-puppet-agent -e "switchover to NEW"

(Equivalent manual steps)

- Set old master in read only: mysql.py -hOLD -e "set global read_only=ON;"

- Disable heartbeat @OLD

killall perl

- Confirm new master has catched up

host=OLD; echo "== $host =="; mysql.py -h $host -e "show master status\G show slave status\G" | grep ' File:\| Position:\|Relay_Master_Log_File:\|Exec\_Master\_Log\_Pos:'; host=NEW; echo "== $host =="; mysql.py -h $host -e "select @@hostname; show master status\G show slave status\G" | grep ' File:\| Position:\|Relay_Master_Log_File:\|Exec\_Master\_Log\_Pos:'

- Stop slave on new master

mysql.py -h NEW -e "STOP SLAVE;"

- Confirm pt-hearbeat has been started on NEW

pgrep perl / mysql.py -h NEW -e "SELECT * FROM heartbeat.heartbeat ORDER BY ts DESC LIMIT 1\G"

- Remove read-only from NEW: mysql.py -hNEW -e "set global read_only=OFF;"

- RESET SLAVE ALL on new master

mysql.py -h NEW.eqiad.wmnet -e "reset slave all;"

- Change old master to replicate from new master

mysql.py -hOLD: change master to master_host='NEW.eqiad.wmnet', master_user='repl', master_password=, master_port=3306, master_log_file=, master_log_pos=, master_ssl=1;

if all looks good: start slave; Enable GTID on old master (OLD)

(End of equivalent manual steps)

Clean up tasks:

- change events for query killer:

events_coredb_master.sql on the new master NEW events_coredb_slave.sql on the new slave OLD

- Update DNS (example): https://gerrit.wikimedia.org/r/#/c/operations/dns/+/538748/

- Clean up lag in orchestrator. Typically you need to go to the new master's heartbeat database and clean up the old master server_id from that table (with replication enabled):

select * from heartbeat; -- Get the server_id from the old master delete from heartbeat where server_id=171970778; -- example id for db1183

- Give weight to OLD if needed

- If the old master will be decommissioned or will go under maintenance: depool it from dbctl: dbctl instance HOSTNAME depool

- Change candidate master note (generally remove it from NEW and add it to OLD)

dbctl instance OLD set-candidate-master --section sX true dbctl instance NEW set-candidate-master --section sX false

- Update/resolve phabricator ticket about failover

External store section failover checklist

External store failovers differ a bit from current sX ones, as we need to disable writes on the given section first. RO external stores (as of today, es1, es2 and es3) do not need this, as those hosts are stand alone and only read only.

As of today, es4 and es5 are RW, so they have a normal replication topology.

Disabling writes on a section can be done safely, so the failover needs no rush. Even if it can be done hours ahead, disabling writes should be done just a few minutes the actual failover time, to avoid un-balancing of both sections.

When one section has writes disabled, reads are still happening without any issues.

If the idea is to depool the old master, make sure to leave the new master with a bit of weight, to avoid having just one slave serving traffic. Usually leaving the master with weight 50 and the slave with weight 100 is enough.

Check list for failing over an es section with es1023 and es1024 as examples:

NEW master: es1024 OLD master: es1023 # Check configuration differences between new and old master $ pt-config-diff h=es1023.eqiad.wmnet,F=/root/.my.cnf h=es1024.eqiad.wmnet,F=/root/.my.cnf

# Silence alerts on all hosts # Set NEW master with weight 50 dbctl instance es1024 edit dbctl config commit -m "Set es1024 with weight 50 T255755" # Topology changes, connect everything to es1024 db-switchover --timeout=15 --only-slave-move es1023.eqiad.wmnet es1024.eqiad.wmnet # Disable puppet @es1023 and @es1024 disable-puppet "switchover to es1024" # Merge gerrit puppet change to promote es1024 es1024: https://gerrit.wikimedia.org/r/#/c/operations/puppet/+/607236/ # Start the failover !log "Starting es failover from es1023 to es1024 - https://phabricator.wikimedia.org/T255755" Disable es5 writes https://gerrit.wikimedia.org/r/#/c/operations/mediawiki-config/+/606663/

# Check that es5 is indeed on read-only

# run switchover script from one of the cluster management hosts (cumin1002.eqiad.wmnet, cumin2002.codfw.wmnet):

root@cumin1001:~/wmfmariadbpy/wmfmariadbpy# db-switchover --skip-slave-move es1023 es1024 ; echo es1023; mysql.py -hes1023 -e "show slave status\G" ; echo es1024 ; mysql.py -hes1024 -e "show slave status\G"

# Promote es1024 to master and remove read-only, leave es1023 (old master) with weight 0

dbctl --scope eqiad section es5 set-master es1024 && dbctl config commit -m "Promote es1024 to es5 master T255755"

# Restart puppet on old and new masters (for heartbeat): es1023 and es1024

run-puppet-agent -e "switchover to es1024"

# Enable es5 on MW (REVERT PATCH https://gerrit.wikimedia.org/r/#/c/operations/mediawiki-config/+/606663/)

Clean up tasks:

# change events for query killer:

events_coredb_master.sql on the new master es1024

events_coredb_slave.sql on the new slave es1023

# Update DNS: https://gerrit.wikimedia.org/r/609899

# Clean up lag in orchestrator. Typically you need to go to the new master's heartbeat database and clean up the old master server_id from that table (with replication enabled):

select * from heartbeat; -- Get the server_id from the old master

delete from heartbeat where server_id=171970778; -- example id for db1183

# Update/resolve phabricator ticket about failover

Misc section failover checklist (example with m2)

OLD MASTER: db1065

NEW MASTER: db1132

- Check configuration differences between new and old master

$ pt-config-diff h=db1068.eqiad.wmnet,F=/root/.my.cnf h=db1081.eqiad.wmnet,F=/root/.my.cnf

- Silence alerts on all hosts

- Topology changes: move everything under db1132

db-switchover --timeout=1--only-slave-move db1065.eqiad.wmnet db1132.eqiad.wmnet

- Disable puppet @db1065, puppet @db1132

puppet agent --disable "switchover to db1132"

- Merge gerrit: https://gerrit.wikimedia.org/r/#/c/operations/puppet/+/519975/

- Run puppet on dbproxy1002 and dbproxy1007 and check the config

run-puppet-agent && cat /etc/haproxy/conf.d/db-master.cfg

- Start the failover

!log Failover m2 from db1065 to db1132 - T226952 root@cumin1001:~/wmfmariadbpy/wmfmariadbpy# db-switchover --skip-slave-move db1065 db1132

- Reload haproxies

dbproxy1002: systemctl reload haproxy && echo "show stat" | socat /run/haproxy/haproxy.sock stdio dbproxy1007: systemctl reload haproxy && echo "show stat" | socat /run/haproxy/haproxy.sock stdio

- kill connections on the old master (db1065)

pt-kill --print --kill --victims all --match-all F=/dev/null,S=/run/mysqld/mysql.sock

- Restart puppet on old and new masters (for heartbeat):db1065 and db1132

run-puppet-agent -f

- Check services affected (otrs,debmonitor) DEBMONITOR and OTRS looking good

- Clean up lag in orchestrator. Typically you need to go to the new master's heartbeat database and clean up the old master server_id from that table (with replication enabled):

select * from heartbeat; -- Get the server_id from the old master delete from heartbeat where server_id=171970778; -- example id for db1183

- change events for query killer:

events_coredb_master.sql on the new master db1132 events_coredb_slave.sql on the new slave db1065

- Update/resolve phabricator ticket about failover https://phabricator.wikimedia.org/T226952

- Create decommissioning ticket for db1065

Special section: x1 master switchover

x1 is a "special" section which cannot be put on read-only on mediawiki, so it needs to rely on the db-switchover script which puts MySQL on read-only. When failing over, please tag the following Teams and people on the phabricator task so they can have a heads up as they are x1 stakeholders and need to know that x1 will have writes blocked for around one minute.

- Subscribers: Tgr, JoeWalsh, Dbrant, Ladsgroup, Addshore, Legoktm

- Tags: Cognate, Growth-Team, Language-Team, User-notice, UrlShortener, StructuredDiscussions, MediaWiki-extensions-BounceHandler, ContentTranslation, Reading List Service, WikimediaEditorTasks.

Example task: phab:T226358

Decommissioning a mariadb host server checklist

See MariaDB/Decommissioning_a_DB_Host

Depool a broken or lagged replica

From one of the cluster management hosts (cumin1002.eqiad.wmnet, cumin2002.codfw.wmnet):

dbctl instance dbXXXX depool dbctl config commit -m "dbXXXX depooled"

Create a task with the DBA tag so DBAs can follow up and checkout what happened, a proper fix etc

If the broken host is a parsercache, follow this procedure.

Testing servers

As of today (Dec 2022) there are two testing hosts on a shard called test-s4:

- DB master (writable): db1124.eqiad.wmnet 256GB RAM + RAID10

- DB slave: (read-only): db1125.eqiad.wmnet 256GB RAM + RAID10

Whilst these hosts are normally up, it is not guaranteed they will always be available or up.

These hosts have static data, and are not updated with production data. More tables or wikis can be requested if needed by filing a ticket to DBAs). It is not guaranteed that they'll be included, but they can be studied and some expectations about ETA and if it is possible to have that data will be given on the ticket.

Access to these hosts is granted individually to those who already have an NDA and cluster access if really needed. Please file a ticket and tag DBA to let us know why you need access so we can start a discussion about it.

People with access

- Data Persistence Members

How to enable a new external storage (es) section

The process of enabling a new external storage section can be complex and require different steps.

Pre steps

- Check all the involved servers have replication working and enabled (with SSL). Check replication lag is 0

- Check all the involved servers have weight (if not done, check the section below)

- Check pt-heartbeat is running

- Check all the involved servers have notifications enabled (and Icinga looks clean)

- Check the future active master has read_only set to OFF

- Check the future servers have all the wikis and blobs_clusterXX tables created. This is an example ticket T245720

- Check wikiadmin wikiuser have grants.

Setting up the servers with dbctl

external storage sections normally have the following weights: master: 0 slave1: 100 slave2: 100

In order to be able to do so, use dbctl to generate those configs.

dbctl instance esXXXX edit

And leave it like this for the master

# Editing object eqiad/esXXXX

host_ip: 10.XX.XX.XX

note:

port: 3306

sections:

esX: {percentage: 100, pooled: true, weight: 0}

And like this for a slave

# Editing object eqiad/esXXXX

host_ip: 10.XX.XX.XX

note:

port: 3306

sections:

esX: {percentage: 100, pooled: true, weight: 100}

Commit the change

dbtcl config commit -m "Set weights for the new es hosts - TXXXXXX"

Mediawiki patches and deployment

In order to be able to set the new external storage there are two steps needed from mediawiki point of view. They can be just one, but in order to catch errors, it better be split into two.

- Enable the new section in MW, this should be a NOOP, but would allow testing before going fully live.

- Add the section as described here: https://gerrit.wikimedia.org/r/#/c/operations/mediawiki-config/+/577185/

- Make sure to double check the blobs_clusterXX entry match the tables number on the future new tables.

Once the change is merged and deployed to the passive DC, use Wikimedia Debug extension to browse the site via mwdebug2001.codfw.wmnet and monitor errors. If everything looks fine, deploy on the active DC.

Use shell.php from a mwmaint host to fetch stuff from those new tables, to make sure grants and everything is fine. Getting a false is a good thing. Everything else might require further research.

root@mwmaint1002:~# mwscript shell.php --wiki=enwiki Psy Shell (PHP 7.2.26 — cli) by Justin Hileman >>> ExternalStore::fetchFromURL( 'DB://cluster26/1' ) Wikimedia/Rdbms/DBConnectionError with message 'Cannot access the database: No working replica DB server: Unknown error' >>> ExternalStore::fetchFromURL( 'DB://cluster25/1' ) => b"Ý][û~¸»¿vÇ(i╚

If everything looks fine, the next patch should be enabling that section to be writable.

https://gerrit.wikimedia.org/r/#/c/operations/mediawiki-config/+/577189/

Before fully deploying that change, test it on mwdebug. Generate some writes by writing on your talk page and checking on the new blobs_clusterXX table. It might take a few writes to be able to show up there, as it is balanced with the other existing external storage masters.

Try a few times, and a few projects to make sure the row shows up there and on all the hosts (to double check replication is working as expected).

If the writes are showing up, and nothing else is errorring, you are good to go and fully deploy to all DCs.

Once deployed, monitor for lag, errors and periodically select from the new tables to make sure it is getting new things.

- Update spicerack to get these new sections up to date: https://gerrit.wikimedia.org/r/#/c/operations/software/spicerack/+/576297/

How to set a external storage (es) section to read only

Whenever an existing external storage section needs to go read-only, these are the steps that need to be taken.

- Create a MW patch that set it to read-only and remove it from the DefaultExternalStore : https://gerrit.wikimedia.org/r/#/c/operations/mediawiki-config/+/578766/

As always, first push to the passive DC, monitor for logs and if all is ok, push to the active DC:

- Once the change is live, monitor the master binlog (skip heartbeat entries) and make sure the INSERTS stopped a few seconds after the change is live everywhere.

- Disable alerts for 10-15 minutes on the involved servers, to avoid any alerts.

- Once writes has stopped, prepare and push a puppet patch to change those hosts to standalone: https://gerrit.wikimedia.org/r/#/c/operations/puppet/+/578816/

- Set read_only=1 on the master

- Once puppet has run on the master, check that pt-heartbeat has stopped there. Once done, run show master status; to make sure nothing is being written.

- Run the following across all the hosts: show master status; show slave status\G and note those positions somewhere.

- Reset replication across all the hosts: stop slave; reset slave all

- Update tendril to hide that replication tree:

update shards set display=0 where name='esX'

- Update zarcillo to reflect those hosts as standalone

update sections set standalone=1 where name='esX';

- Slowly give some weight to the old masters, and now standalone - probably best done in a few commits, to slowly warm their buffer pool

dbctl instance esXXXX edit dbctl config commit -m "Slowly give weight to old and now standalone es masters TXXXXXX"

dbctl at the moment doesn't treat standalone hosts any different from a normal replicating section. So it will be showed normally on either db-eqiad.json and db-codfw.json even though there is no real master anymore but just standalone hosts.

- Update Spicerack to reflect that these new sections are RO https://gerrit.wikimedia.org/r/#/c/operations/software/spicerack/+/576297/

IPv6 and MariaDB

MariaDB (and MySQL) should have good support of IPv6. However, due to account handling being based on IP addresses, not DNS, a lot of issues, specially with authentication and grants can arise:

- Hosts using DNS will try to contact mysqld using IPv6 first. That will cause timeouts (of several seconds) until it fails back to IPv4

- Privileges may fail completely (access denied):

- When setting up and performing mysql backups

- When accessing host from remote mysql root clients (e.g. cumin)

- When using replication

- When using watchdog user for tendril

db1108, as of July 2020, is the only host configured using IPv6 while holding a mysql service.

Tables drift between code and production

There are known differences between tables definitions in MW and the live version in production. We have historically worked hard to the most important ones, but there are still lots minor ones that need to be solved.

There is a dashboard where those can be checked/observed: https://drift-tracker.toolforge.org/report/core/

Warming up production databases

This is mostly done before a DC switchover so the databases are not totally cold (aka with nothing cached).

We do this in three different ways:

MW warmup script

From a mw maintenance host make sure you have a urls-cluster.txt file:

root@mwmaint1002:/home/marostegui# cat urls-cluster.txt # Purpose: Root redirect https://%server/ # Purpose: Main Page, Skin cache, Sidebar cache, Localisation cache https://%server/wiki/Main_Page # Purpose: MobileFrontend, Main Page https://%mobileServer/wiki/Main_Page # Purpose: Login page https://%server/wiki/Special:UserLogin # Purpose: API, Recent changes https://%server/w/api.php?format=json&action=query&list=recentchanges

If you want to warm up eqiad, simply run:

nodejs /var/lib/mediawiki-cache-warmup/warmup.js urls-cluster.txt spread appservers.svc.eqiad.wmnet

Normally you might want to run this several times for hours to make sure you touch as many wikis and hosts as possible. In the output of the script you'll be able to see how the loading times start to decrease over time.

This script is pretty harmless and be run without much babysitting in a loop:

while true; do nodejs /var/lib/mediawiki-cache-warmup/warmup.js urls-cluster.txt spread appservers.svc.eqiad.wmnet ; sleep 15; done

Comparing tables

Using db-compare over several is a good way to bring some of the most accessed tables into memory. This is a very gentle script and can be run on the background for hours/days without many issues.

The idea here is to check data consistency between DCs and bring data into the buffer pool. We have a file with the most important tables to be checked/warmed up, which is called tables_to_check.txt and lives at: https://raw.githubusercontent.com/wikimedia/operations-software/master/dbtools/tables_to_check.txt

The content:

actor actor_id archive ar_id change_tag ct_id comment comment_id content content_id logging log_id pagelinks pl_from page page_id revision rev_id revision_actor_temp revactor_rev revision_comment_temp revcomment_rev slots slot_revision_id text old_id user user_id watchlist wl_id

What we normally do is to iterate over all the databases on a given section and go through all the tables across all the wikis there. The idea is to select all the production hosts on the passive DC and compare them against the vslow,dump host on the active DC.

The list of hosts per section can be retrieved from zarcillo database, orchestrator or with the section tool: The list of databases per section can be retrieved from the sX.dblist files present on wmf-config repo

Once those things are ready, we can simply iterate over all the tables with a loop (example of enwiki (s1):

cat git/mediawiki-config/dblists/s1.dblist | grep -v "#" | while read db; do cat tables_to_check.txt | while read table index; do echo "$db.$table"; db-compare $db $table $index db2146.codfw.wmnet:3306 db1163 db1099:3311 db1105:3311 db1106 db1118 db1119 db1134 db1135 db1164 db1169 db1184 || break 2; done ; done

If differences are found, they need to be investigated BEFORE giving greenlight for the DC switchover.

Warming up ES hosts with compare

External store hosts are different as they only have one table per wiki, and depending on the section it is name differently, they are all called blobs_clusterX.

In Sept 2021 this is the mapping: Read only sections: es1 blob_cluster1 until blob_cluster23 es2 blob_cluster24 es3 blob_cluster5

Writable sections: es4 blob_cluster26 es5 blob_cluster27

This comparison isn't trustable for data drifts as the PK is a blob_id but can still be used to warm up things. In this case, the iteration follow the same pattern as a normal one, you simply need to check the same table across all the databases existing on the hosts, that can be done with the following loop (as data drift isn't to be trusted, no need to compare against the active DC, so just comparing them within the passive DC):

for i in `mysql.py -hes1023 -e "show databases" -BN`; do db-compare $i blobs_cluster27 blob_id es1023 es1024 es1025; done

Warming up parsercache hosts

For parsercache hosts, the easiest way is to simply do a table count on all its tables.

for i in `mysql.py -hpc1011 parsercache -BN -e "show tables"`; do echo $i; mysql.py -hpc1012 parsercache -e "select count(*) from $i"; mysql.py -hpc1011 parsercache -e "select count(*) from $i";mysql.py -hpc1013 parsercache -e "select count(*) from $i"; done

Warming up x1 hosts

Hosts in x1 have all the wikis but only a few tables per wiki and we mostly only warm up the echo tables. The databases present on x1 are at echo.dblists on the mediawiki-config repo: https://github.com/wikimedia/operations-mediawiki-config/blob/master/dblists/echo.dblist

The tables to check file is present at: https://raw.githubusercontent.com/wikimedia/operations-software/master/dbtools/echo_tables_to_check.txt

This is the content on Sept 2021:

echo_event event_id echo_notification notification_event echo_target_page etp_page

The check can be performed with a simple loop:

cat git/mediawiki-config/dblists/echo.dblist | grep -v "#" | while read db; do cat echo_tables_to_check.txt | while read table index; do echo "$db.$table"; db-compare $db $table $index db2096.codfw.wmnet db1103 db1120 db1137; done; done

Replaying traffic

Probably the most effective way to warm up tables, but the most time consuming one and definitely dangerous. So proceed with caution.

Another way to warm up more concrete tables and hosts is to replay some of the most common/slow queries that are arriving to the active DC and send them to the passive DC. This requires a bit more manual work but this is a high level way of doing it.

Which queries to replay

Live traffic

If you are not 100% sure of what you are doing, do not proceed with this warm up method. YOU COULD CORRUPT DATA

On the passive DC, you can enable slow query log for a few seconds using the following commands. It highly depends on the day the kind of queries you can grab, but in general you cannot really reuse them as some of them have specific timestamps (especially recentchanges).

First, make sure you are using FILE as a way to log queries and which file is it:

> SHOW VARIABLES LIKE "general_log%"; +------------------+------------+ | Variable_name | Value | +------------------+------------+ | general_log | OFF | | general_log_file | db1169.log | +------------------+------------+

Now you are ready to enable, capture half a minute or a minute of traffic and then disable it:

SET global general_log = 1; select sleep (30); SET global general_log = 0;

Keep in mind that if forgotten, this file can grow to huge values, so always try to use the enable and disable on the same command so you don't forget to disable it. Unfortunately this captures all queries and not only SELECTs (as log_disabled_statements isn't dynamic)

Once you've got the file, you need to exclude INSERT DELETE UPDATE and ONLY leave SELECTs statements otherwise YOU COULD CORRUPT DATA

If you are not 100% sure of what you are doing, do not proceed with this warm up method.

Once the file only has SELECTs, you can replay them to all the hosts you want to warm up

This page is a part of the SRE Data Persistence technical documentation

(go here for a list of all our pages)