Zotero

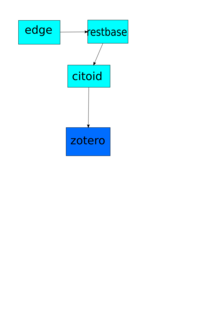

Zotero is a Node.js service that runs alongside the Citoid service.

Updating

See: mw:Citoid/Updating Zotero

Deployment

These are directions for deploying Zotero. More detailed, but more general, directions for Node.js services are at Migrating_from_scap-helm#Code_deployment/configuration_changes.

Locate build candidate

Locate the candidate build in the GitLab CI jobs list: https://gitlab.wikimedia.org/repos/mediawiki/services/zotero/-/jobs

You can also check the Docker registry, but it is slow to update and may not contain the latest build: https://docker-registry.wikimedia.org/repos/mediawiki/services/zotero/tags/

The name of the build will look something like '2024-11-08-122812-production'.
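Because these names start with a date and time in YYYY-MM-DD-HHMMSS form, sorting them as plain text also sorts them by age, so the newest production build can be picked with standard tools. This is a minimal sketch: latest_production_tag and registry_tags are hypothetical helpers, and registry_tags assumes the registry serves the standard Docker Registry HTTP API v2 tags/list endpoint for this repository.

```sh
# Hypothetical helper: read image tags (one per line) on stdin and print the
# newest one matching the YYYY-MM-DD-HHMMSS-production naming pattern.
# Tags in this format sort chronologically when sorted as plain text.
latest_production_tag() {
    grep -E '^[0-9]{4}-[0-9]{2}-[0-9]{2}-[0-9]{6}-production$' | sort | tail -n 1
}

# Hypothetical helper: list tags through the Docker Registry HTTP API v2.
# ASSUMPTION: the registry exposes /v2/<repo>/tags/list and answers with JSON
# like {"name":"...","tags":["...","..."]}. The JSON is split crudely with
# tr/sed so that no JSON parser is needed; non-tag lines are dropped later
# by latest_production_tag's pattern.
registry_tags() {
    curl -s "https://docker-registry.wikimedia.org/v2/repos/mediawiki/services/zotero/tags/list" \
        | tr ',' '\n' \
        | sed -e 's/[][{}" ]//g' -e 's/^tags://'
}
```

For example, `registry_tags | latest_production_tag`. Since the registry can lag behind GitLab, treat its answer as a cross-check rather than the final word.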

Add change via gerrit

- Clone the deployment-charts repository.

- Edit helmfile.d/services/zotero/values.yaml (for example with vi). The relevant section looks like this:

main_app:
  image: repos/mediawiki/services/zotero
  limits:
    cpu: 10
    memory: 4Gi
  liveness_probe:
    tcpSocket:
      port: 1969
  port: 1969
  requests:
    cpu: 200m
    memory: 200Mi
  version: 2024-11-08-122812-change-me-production

- Make a change request (CR) in Gerrit that updates the version value in values.yaml, and merge it after a successful review. staging.yaml and codfw.yaml inherit their values from values.yaml.

- After the merge, log into a deployment server. A cron job that runs every minute updates the /srv/deployment-charts directory with the contents from git.
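The version bump is a one-line change and can be scripted. This is a minimal sketch, assuming values.yaml contains a single version: line (as in the excerpt above) and that the usual Gerrit workflow of pushing to refs/for/master applies to deployment-charts; set_zotero_version and upload_for_review are hypothetical helpers, and the commit message format is only an illustration.

```sh
# Hypothetical helper: rewrite the "version:" line of a values.yaml in place,
# keeping its indentation. Writes to a temporary file and moves it back so the
# edit behaves the same with GNU and BSD sed.
set_zotero_version() {
    local file=$1 tag=$2
    local tmp="${file}.tmp.$$"
    sed -E "s/^([[:space:]]*version:[[:space:]]*).*/\\1${tag}/" "$file" > "$tmp" \
        && mv "$tmp" "$file"
}

# Hypothetical helper: commit all tracked changes and upload them for review.
# ASSUMPTION: the target branch is master. Note that -a stages every modified
# tracked file, so check `git status` first.
upload_for_review() {
    git commit -a -m "zotero: deploy $1" && git push origin HEAD:refs/for/master
}
```

For example, from the root of your checkout: `set_zotero_version helmfile.d/services/zotero/values.yaml 2024-11-08-122812-production && upload_for_review 2024-11-08-122812-production`.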

Log into deployment server

SSH into the deployment server:

ssh deployment.eqiad.wmnet

Navigate to /srv/deployment-charts/helmfile.d/services/
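Because the checkout under /srv/deployment-charts is refreshed by a cron job about once a minute, it helps to confirm that it already contains your new version before deploying. This is a minimal polling sketch; wait_for_version is a hypothetical helper, and the default timeout and the 10-second polling interval are arbitrary choices.

```sh
# Hypothetical helper: poll a values.yaml until its "version:" line equals the
# expected tag, or give up after a timeout in seconds (default 120).
# Returns 0 once the tag is present, 1 on timeout.
wait_for_version() {
    local file=$1 tag=$2 timeout=${3:-120}
    local waited=0
    while :; do
        if grep -Eq "^[[:space:]]*version:[[:space:]]*${tag}[[:space:]]*\$" "$file"; then
            return 0
        fi
        if [ "$waited" -ge "$timeout" ]; then
            echo "version $tag not found in $file after ${timeout}s" >&2
            return 1
        fi
        sleep 10
        waited=$((waited + 10))
    done
}
```

For example: `wait_for_version /srv/deployment-charts/helmfile.d/services/zotero/values.yaml 2024-11-08-122812-production`.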

Zotero runs in both core data centers, eqiad and codfw. There is also a staging server, where you can test changes first.

Staging server

cd /srv/deployment-charts/helmfile.d/services/zotero

helmfile -e staging -i apply

helmfile apply may take a while.

It checks the status again, so you can see whether all instances have been restarted yet.
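Another way to see whether all instances have restarted is to list the pods with kubectl. This is a minimal sketch that parses `kubectl get pods --no-headers` output (columns NAME, READY, STATUS, RESTARTS, AGE); all_pods_ready is a hypothetical helper, and it assumes kube_env has already pointed kubectl at the zotero namespace in the chosen cluster, as the rollback commands on this page do.

```sh
# Hypothetical helper: read `kubectl get pods --no-headers` output on stdin and
# succeed only if every pod is Running with all of its containers ready
# (READY column "n/n"). Empty input counts as a failure.
all_pods_ready() {
    awk '
        {
            total++
            split($2, ready, "/")
            if ($3 != "Running" || ready[1] != ready[2]) bad++
        }
        END { if (total == 0 || bad > 0) exit 1; exit 0 }
    '
}
```

For example: `kube_env zotero staging; kubectl get pods --no-headers | all_pods_ready && echo "all pods ready"`.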

Verify Zotero is running on staging with a curl request:

curl -k -d 'https://en.wikipedia.org/wiki/Darth_Vader' -H 'Content-Type: text/plain' https://staging.svc.eqiad.wmnet:4969/web

curl -d '9791029801297' -H 'Content-Type: text/plain' https://staging.svc.eqiad.wmnet:4969/search
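Zotero answers these requests with JSON citation data, so a slightly stronger check than eyeballing the output is to look for an itemType field in the response. This is a minimal sketch; zotero_smoke_test is a hypothetical helper, and treating a response that contains "itemType" as success is an assumption, not a documented contract.

```sh
# Hypothetical helper: POST a plain-text query to a Zotero endpoint and check
# that the JSON response mentions at least one "itemType" field.
# Usage: zotero_smoke_test <base-url> <web|search> <query> [extra curl options]
zotero_smoke_test() {
    local base=$1 endpoint=$2 query=$3
    shift 3
    if curl -s "$@" -d "$query" -H 'Content-Type: text/plain' "$base/$endpoint" \
        | grep -q '"itemType"'; then
        echo "OK $endpoint: $query"
    else
        echo "FAIL $endpoint: $query" >&2
        return 1
    fi
}
```

For example: `zotero_smoke_test https://staging.svc.eqiad.wmnet:4969 web 'https://en.wikipedia.org/wiki/Darth_Vader' -k`.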

Production server

helmfile -e codfw -i apply

Then repeat for eqiad:

helmfile -e eqiad -i apply
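The order used here (staging first, then codfw, then eqiad) can be wrapped so that a failure in one environment stops the rollout before it reaches the next. This is a minimal sketch for the deployment server; deploy_zotero is a hypothetical wrapper, and DRY_RUN=1 only prints the commands. helmfile's -i flag still asks for confirmation before it modifies each cluster, so you review every diff.

```sh
# Directory used in the staging step above.
ZOTERO_DIR=/srv/deployment-charts/helmfile.d/services/zotero

# Hypothetical wrapper: apply the Zotero release to each environment given as
# an argument, in order, stopping at the first failure.
# Set DRY_RUN=1 to print the commands instead of running them.
deploy_zotero() {
    local target
    for target in "$@"; do
        if [ "${DRY_RUN:-0}" = 1 ]; then
            echo "cd $ZOTERO_DIR && helmfile -e $target -i apply"
        else
            (cd "$ZOTERO_DIR" && helmfile -e "$target" -i apply) || {
                echo "deploy to $target failed; not continuing" >&2
                return 1
            }
        fi
    done
}
```

For example, run `deploy_zotero staging`, verify staging, and then run `deploy_zotero codfw eqiad`.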

Example queries against eqiad:

curl -k -d '9791029801297' -H 'Content-Type: text/plain' https://zotero.svc.eqiad.wmnet:4969/search

curl -k -d 'https://en.wikipedia.org/wiki/Darth_Vader' -H 'Content-Type: text/plain' https://zotero.svc.eqiad.wmnet:4969/web

curl -k -d 'https://www.example.com' -H 'Content-Type: text/plain' https://zotero.svc.eqiad.wmnet:4969/web

Verify

Verify Zotero is running with a curl request:

curl -k -d 'https://en.wikipedia.org/wiki/Darth_Vader' -H 'Content-Type: text/plain' https://zotero.svc.codfw.wmnet:4969/web

curl -k -d 'https://en.wikipedia.org/wiki/Darth_Vader' -H 'Content-Type: text/plain' https://zotero.svc.eqiad.wmnet:4969/web

curl -d 'https://en.wikipedia.org/wiki/Darth_Vader' -H 'Content-Type: text/plain' https://zotero.discovery.wmnet:4969/web

curl -d '9791029801297' -H 'Content-Type: text/plain' https://zotero.discovery.wmnet:4969/search
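To check every endpoint in one go, loop over them and compare HTTP status codes. This is a minimal sketch; check_all is a hypothetical helper that reuses the Darth Vader query from the commands above, and treating any status other than 200 as a failure is an assumption.

```sh
# Hypothetical helper: POST the same query to the /web endpoint of each Zotero
# base URL given as an argument and print "<status> <base-url>".
# Returns 1 if any endpoint does not answer with HTTP 200
# (curl reports 000 when the connection itself fails).
check_all() {
    local failed=0 base code
    for base in "$@"; do
        code=$(curl -k -s -o /dev/null -w '%{http_code}' \
            -d 'https://en.wikipedia.org/wiki/Darth_Vader' \
            -H 'Content-Type: text/plain' "$base/web")
        echo "$code $base"
        [ "$code" = 200 ] || failed=1
    done
    return "$failed"
}
```

For example: `check_all https://zotero.svc.codfw.wmnet:4969 https://zotero.svc.eqiad.wmnet:4969 https://zotero.discovery.wmnet:4969`.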

Logs

Logging was disabled in commit 827891af because the logs were not just useless but actively harmful to our environment. If you need to track down a request that caused an issue, the Citoid logs may help: apart from monitoring, Citoid is the only service that talks to Zotero. Citoid logs are in Logstash.

Monitoring

A probe uses the export endpoint to check whether Zotero is alive:

Its alerts can be found in Logstash under AlertManager by filtering for labels:instance: zotero:4969
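To reproduce a similar check by hand, you can POST a minimal item to the export endpoint. This is a minimal sketch, assuming the translation server's /export endpoint accepts a JSON array of Zotero items with a format query parameter (bibtex here); the exact request the production probe sends is not documented on this page, and probe_export is a hypothetical helper.

```sh
# Hypothetical helper: send one minimal book item to /export and succeed if the
# server answers HTTP 200 with a non-empty body.
# ASSUMPTION: /export?format=bibtex accepts a JSON array of Zotero items.
probe_export() {
    local base=$1 out code
    out=$(mktemp) || return 1
    code=$(curl -k -s -o "$out" -w '%{http_code}' \
        -H 'Content-Type: application/json' \
        -d '[{"itemType":"book","title":"Probe test"}]' \
        "$base/export?format=bibtex")
    if [ "$code" = 200 ] && [ -s "$out" ]; then
        echo "export OK"
        rm -f "$out"
        return 0
    fi
    echo "export failed (HTTP $code)" >&2
    rm -f "$out"
    return 1
}
```

For example: `probe_export https://zotero.svc.eqiad.wmnet:4969`.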

The citoid swagger probe also includes a test of Zotero:

Envoy telemetry also includes Zotero:

Rolling back changes

If you need to roll back a change because something went wrong:

- Revert the git commit to the deployment-charts repo

- Merge the revert (with review if needed)

- Wait one minute for the cron job to pull the change to the deployment server

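- From /srv/deployment-charts/helmfile.d/services/zotero, run the following to see what you'll be changing, setting ENV to one of staging, eqiad, or codfw:

ENV=<staging,eqiad,codfw>; kube_env zotero $ENV; helmfile -e $ENV diff

- Then apply the change:

helmfile -e $ENV apply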
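The diff-then-apply sequence can be wrapped so that apply only runs after you have seen the diff and confirmed it. This is a minimal sketch for the deployment server, run from /srv/deployment-charts/helmfile.d/services/zotero and assuming kube_env is available in your shell as in the commands above; rollback_apply is a hypothetical helper, and DRY_RUN=1 only prints the commands.

```sh
# Hypothetical helper: after the revert has synced, show the helmfile diff for
# one environment and apply it only if you answer "y".
# DRY_RUN=1 prints the commands instead of running them.
rollback_apply() {
    local target=$1 answer
    case "$target" in
        staging|eqiad|codfw) ;;
        *) echo "usage: rollback_apply <staging|eqiad|codfw>" >&2; return 2 ;;
    esac
    if [ "${DRY_RUN:-0}" = 1 ]; then
        echo "kube_env zotero $target; helmfile -e $target diff; helmfile -e $target apply"
        return 0
    fi
    kube_env zotero "$target" || return 1
    helmfile -e "$target" diff || return 1
    printf 'Apply this diff to %s? [y/N] ' "$target"
    read -r answer
    [ "$answer" = y ] || { echo "aborted" >&2; return 1; }
    helmfile -e "$target" apply
}
```

For example: `rollback_apply codfw`, then `rollback_apply eqiad`.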

Rolling restart

A rolling restart of Zotero is sometimes necessary to resolve production issues. To run a rolling restart, run this on the deployment host, where $ENV is one of staging, eqiad, or codfw:

helmfile -e ${ENV?} -f /srv/deployment-charts/helmfile.d/services/zotero/helmfile.yaml --state-values-set roll_restart=1 sync
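The command above relies on ${ENV?} to abort when ENV is unset, but it does not catch a misspelled environment name. This is a minimal wrapper sketch that checks the name first; zotero_roll_restart is a hypothetical helper, and DRY_RUN=1 only prints the command.

```sh
ZOTERO_HELMFILE=/srv/deployment-charts/helmfile.d/services/zotero/helmfile.yaml

# Hypothetical wrapper: validate the environment name, then run the rolling
# restart command from this page. DRY_RUN=1 prints the command instead.
zotero_roll_restart() {
    case "$1" in
        staging|eqiad|codfw) ;;
        *) echo "usage: zotero_roll_restart <staging|eqiad|codfw>" >&2; return 2 ;;
    esac
    if [ "${DRY_RUN:-0}" = 1 ]; then
        echo "helmfile -e $1 -f $ZOTERO_HELMFILE --state-values-set roll_restart=1 sync"
    else
        helmfile -e "$1" -f "$ZOTERO_HELMFILE" --state-values-set roll_restart=1 sync
    fi
}
```

For example: `zotero_roll_restart eqiad`.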

Rolling back in an emergency

If you can't wait the one minute, or the cron job that updates from git fails, you can roll back manually using helm.

- Find the revision to roll back to

kube_env zotero <staging,eqiad,codfw>; helm history <production> --tiller-namespace YOUR_SERVICE_NAMESPACE

- For example, you might pick the penultimate revision in this sample output:

REVISION  UPDATED                   STATUS      CHART          DESCRIPTION
1         Tue Jun 18 08:39:20 2019  SUPERSEDED  termbox-0.0.2  Install complete
2         Wed Jun 19 08:20:42 2019  SUPERSEDED  termbox-0.0.3  Upgrade complete
3         Wed Jun 19 10:33:34 2019  SUPERSEDED  termbox-0.0.3  Upgrade complete
4         Tue Jul 9 14:21:39 2019   SUPERSEDED  termbox-0.0.3  Upgrade complete

- Rollback with:

kube_env zotero <staging,eqiad,codfw>; helm rollback <production> 3 --tiller-namespace YOUR_SERVICE_NAMESPACE
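Picking the revision can also be scripted. This is a minimal sketch that reads helm history output (header row first, oldest revision first, as in the sample above) and prints the second-to-last REVISION number; previous_revision is a hypothetical helper, and deciding which revision is actually safe to roll back to is still your call.

```sh
# Hypothetical helper: read `helm history` output on stdin and print the
# REVISION number of the second-to-last row, skipping the header line.
# Exits with status 1 if there are fewer than two revisions.
previous_revision() {
    awk '
        NR > 1 && $1 ~ /^[0-9]+$/ { prev = last; last = $1 }
        END { if (prev != "") print prev; else exit 1 }
    '
}
```

For example, pipe the helm history command from the first step into `previous_revision` and pass the result to helm rollback.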