External storage

The External Storage (ES) hosts are used to store the compressed text content of wiki page revisions in MariaDB databases. When a user asks for a page or a diff to an older version of a page, Mediawiki grabs the compressed content from the external store database and uses it to display the user's request. Compressed text is stored in a number of different formats.

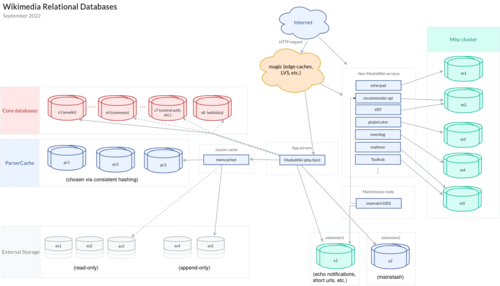

ES data is sharded into multiple logical clusters, each of which (other than the oldest one) is named cluster##. Logical clusters are the cluster names stored in MediaWiki database. Multiple logical clusters can lived in one physical cluster (named es#), which is a set of hosts (i.e. one master in each two regions and several replicas). The mapping between logical clusters and physical clusters is stored in etcd.php. Originally new physical cluster are added when older ones are out of disk space. When new servers with larger disk spaces are commissioned, multiple logical clusters may be merged into one physical cluster, where logical clusters themselves remain stable. In addition, one physical cluster may preemptively include multiple logical clusters, since smaller tables are easier to backup.

The Echo extension uses External Storage to store notifications. The Flow extension uses External Storage to store the Parsoid HTML of users' posts. They use the 'extension1' logical cluster in production as of 2014-07, see InitialiseSettings.php. The AbuseFilter extension uses ExternalStore as well.

Servers

The ES hosts are named es#. Eqiad hosts are numbered >=1000. Codfw hosts are numbered >=2000 They are all Dell R740xd class machines with 12 2TB disks. Configured with RAID10, they have ~12TB available disk.

For a list of which servers are currently active serving which physical cluster, see eqiad.json and codfw.json

The ES servers run MariaDB and use standard MariaDB replication to maintain consistent datasets. The replication topology is described in the image on the right - each colo has flat replication within the colo with one host per colo doing inter-colo replication. Of course this statement should be treated as immediately out of date and verified by querying the MariaDB servers directly.

Reading db.php

The default table name for an ES host is 'blobs'. the templateOverridesByCluster section of db.php allows you to change that. Because many of the logical clusters are coresident on the same physical cluster, most of them are overridden.

Nagios, Monitoring, and Health

Nagios

Nagios watches a few things on the ES hosts:

- stardards: ping, ssh, disk, etc.

- mysql: replication lag, master write status

Here are responses to some potential nagios alerts:

- Host down

- if it's a slave depool it:

dbctl instance esXXXX depool dbctl config commit -m "Depool esXXXX"

- if it's the master, promote one of the other slaves in the same DC to be a new master see: Emergency failover

- Disk full

- verify whether it's / or /srv/ that is full

- delete replication logs, old backups, etc.

- Call a DBA

- if either / or /srv/ reaches 100%, the database will need a reslave

- Replication fallen behind or stopped

- Do nothing - mediawiki will remove the host from rotation on its own

- check RAID for degraded disks

- wait and see if it gets better on its own

- if it doesn't get better on its own call a DBA

- RAID

- Go figure out which disk has failed Raid and MegaCli, put in an RT ticket to replace it.

Health

Other generic commands to check the health of the databases

- show slave status \G

- look for the following 3 lines

- Slave_IO_Running: Yes

- Slave_SQL_Running: Yes

- Seconds_Behind_Master: 0

Switching Icinga to OK read only

This change is a good example of an Icinga patch that will allow for a set of hosts to be read only - needed for those sections which are standalone

Backups and Snapshots

See Backups

Database Schema

The ES hosts have tables named blobs or blobs_cluster#. The schema is more or less the same: two columns, an ID (autoincrementing) and a blob store that contains the gzipped text.

CREATE TABLE `blobs` ( `blob_id` int(10) unsigned NOT NULL AUTO_INCREMENT, `blob_text` longblob, PRIMARY KEY (`blob_id`) ) ENGINE=MyISAM

In the main database schema, you get to the external store from the old_text column of the text table. It contains either the actual text for the page (for very few very old pages) or a pointer into the external store databases. The pointer looks like one of these:

DB://<clustername>/<integer> DB://<clustername>/<integer>/<integer>

clustername is resolved in db.php. There you find the hostname(s) of servers that have the content you're looking for, in addition to the table name.

Path in the database from a page name to its text (using http://en.wikipedia.org/wiki/Defenestration as my example):

select page_latest as rev_id from page where page_namespace=0 and page_title='Defenestration'; select rev_text_id as old_id from revision where rev_id=$rev_id select old_text from text where old_id=$old_id

or, put together into one query:

select text.old_text from page, revision, text

where page.page_namespace=0 and page.page_title='Defenestration'

and page.page_latest = revision.rev_id

and revision.rev_text_id = text.old_id;

OK, going back the other direction... In order to find a list of pages that exist on a specific cluster: (warning these are not efficient queries.)

select old_id as rev_id from text where old_text like 'DB://cluster6%' order by old_id desc limit 5; select rev_page as page_id from revision where rev_text_id=$rev_id; select page_title from page where page_id=$page_id;

or, put together as one query: select 20 pages from cluster 6 (warning this query is slow.):

select page.page_title, revision.rev_id, text.old_text from text,revision,page

where text.old_text like 'DB://cluster6%'

and text.old_id = revision.rev_text_id

and page.page_id = revision.rev_page

order by page.page_id limit 20;

These pages can be loaded in a browser with the rev_id alone with a URL like http://sv.wikipedia.org/w/index.php?oldid=2336620

See also

- Text storage data

- mw:Manual:External Storage

- There's interesting stuff on the page tracking the project to bring the ES service up to date, that ran from 2011-08 to 2011-11.