Wikidata Query Service

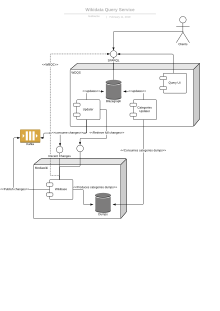

Wikidata Query Service is the Wikimedia implementation of a SPARQL server, based on the Blazegraph engine, that serves queries for Wikidata and other data sets. See the User Manual for a more detailed description.

More information on technical interactions with Query Services is available.

Development environment

You will need Java 8 / JDK 8 and Maven. If your host OS isn't already one of the following, consider using an Ubuntu 22 or Debian 11 Bullseye virtual machine, or possibly a Windows Subsystem for Linux setup (e.g., Ubuntu 22 on WSL).

If you are running Ubuntu 22, you should be able to find the JDK with apt-cache search openjdk-8, then install with sudo apt-get install <package name>.

If you are running Debian 11 Bullseye, where Java 8 isn't normally readily available, you may be able to follow the Wikimedia APT external access and security instructions and then install the required packages with dpkg -i <.deb file>.

The following commands should let you select the Java 8 / JDK 8 binaries to use with the WDQS software:

$ sudo update-alternatives --config java

$ sudo update-alternatives --config javac

You can run those commands again to change your Java / JDK environment as needed for other projects.

If the tips above don't work, consider trying an installer from https://www.oracle.com/technetwork/java/javase/downloads/jdk8-downloads-2133151.html.

To install Maven, run sudo apt-get install maven. This usually works without issues on Ubuntu 22 and Debian 11.

Code

The source code is in the Gerrit project wikidata/query/rdf. To start working on the Wikidata Query Service codebase, clone this repository:

git clone https://gerrit.wikimedia.org/r/wikidata/query/rdf

or the GitHub mirror:

git clone https://github.com/wikimedia/wikidata-query-rdf.git

or if you want to push changes and have a Gerrit account:

git clone ssh://<your_username>@gerrit.wikimedia.org:29418/wikidata/query/rdf

Build

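Then you can build the distribution package by running the following (the clone directory is wikidata-query-rdf for the GitHub mirror and rdf for a Gerrit clone):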

cd wikidata-query-rdf

./mvnw package

The package will be in the dist/target directory. Alternatively, to run the Blazegraph service from the development environment (e.g. for testing), use:

bash war/runBlazegraph.sh

Add the -d option to run it in debug mode. If your build fails because your Maven version differs from the expected one, you can skip the enforcer check:

mvn package -Denforcer.skip=true

To run the Updater, use:

bash tools/runUpdate.sh

The build relies on Blazegraph packages that are stored in Archiva. Their source is in the wikidata/query/blazegraph Gerrit repository. If the dependencies need to be rebuilt, see the instructions on MediaWiki.

See also documentation in the source for more instructions.

The SPARQL endpoint is available under the /sparql path, which internally redirects to /bigdata/namespace/wdq/sparql.
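To smoke-test a development instance, a minimal Python sketch like the following can build a GET URL and send a query. It assumes that the service started with war/runBlazegraph.sh listens on localhost:9999 with the default bigdata context and wdq namespace, so it targets the full /bigdata/namespace/wdq/sparql path rather than the /sparql redirect. The constant and helper names are illustrative assumptions and are not part of WDQS.

```python
"""Minimal sketch: query a local WDQS/Blazegraph SPARQL endpoint.

Assumption: a development instance (e.g. started with war/runBlazegraph.sh)
listens on localhost:9999 with context "bigdata" and namespace "wdq".
"""
import json
import urllib.parse
import urllib.request

# Hypothetical default; adjust host/port to your setup.
ENDPOINT = "http://localhost:9999/bigdata/namespace/wdq/sparql"


def build_query_url(query: str, endpoint: str = ENDPOINT) -> str:
    """Return a GET URL with the SPARQL query URL-encoded in the 'query' parameter."""
    return endpoint + "?" + urllib.parse.urlencode({"query": query})


def run_query(query: str, endpoint: str = ENDPOINT, timeout: float = 60.0) -> dict:
    """Send the query and decode the SPARQL JSON results (performs network I/O)."""
    request = urllib.request.Request(
        build_query_url(query, endpoint),
        headers={"Accept": "application/sparql-results+json"},
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.load(response)


if __name__ == "__main__":
    # Only prints the URL; call run_query(...) once the instance is running.
    print(build_query_url("SELECT * WHERE { ?s ?p ?o } LIMIT 1"))
```

run_query returns the decoded SPARQL JSON result document. Asking for JSON through the Accept header is standard SPARQL protocol content negotiation.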

Build Blazegraph

If changes to the Blazegraph source are needed, they should be checked into the wikidata/query/blazegraph repo. After that, the new Blazegraph sub-version should be built and WDQS should switch to using it. The procedure is as follows:

- Commit fixes (watch for extra whitespace changes!)

- Update README.wmf with descriptions of which changes were made relative to mainstream.

- The Blazegraph source in the master branch will be on a snapshot version, e.g. 2.1.5-wmf.4-SNAPSHOT. Set it to non-snapshot:

mvn versions:set -DnewVersion=2.1.5-wmf.4

- Make a local build:

mvn clean; bash scripts/mavenInstall.sh; mvn -f bigdata-war/pom.xml install -DskipTests=true

- Switch the Blazegraph version in the main pom.xml of the WDQS repo to 2.1.5-wmf.4 (do not push it yet!). Build and verify that everything works as intended.

- Commit the version change in Blazegraph and push it to the main repo. Tag it with the same version and push the tag too.

- Run the following to deploy:

mvn -f pom.xml -P deploy-archiva deploy -P Development; mvn -f bigdata-war/pom.xml -P deploy-archiva deploy -P Development; mvn -f blazegraph-war/pom.xml -P deploy-archiva deploy -P Development

- Commit the version change in WDQS and push it to Gerrit. Ensure the tests pass (this also confirms that the Blazegraph deployment to Archiva worked properly).

- After merging the WDQS change, follow the procedure below to deploy the new WDQS version.

- Bump the Blazegraph master version back to a snapshot and commit/push it:

mvn versions:set -DnewVersion=2.1.5-wmf.5-SNAPSHOT
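The version strings in this procedure follow a simple pattern. The Python sketch below derives the release version and the next snapshot version from one another. It assumes every version looks like <base>-wmf.<N> with an optional -SNAPSHOT suffix, as in the examples above. The helper names are hypothetical.

```python
"""Sketch of the version strings used in the Blazegraph release procedure.

Assumption: versions follow the "<base>-wmf.<N>[-SNAPSHOT]" pattern seen in
the examples (e.g. 2.1.5-wmf.4-SNAPSHOT); the helpers are hypothetical.
"""
import re

_VERSION = re.compile(r"^(?P<base>.+)-wmf\.(?P<n>\d+)(?P<snapshot>-SNAPSHOT)?$")


def release_version(snapshot_version: str) -> str:
    """2.1.5-wmf.4-SNAPSHOT -> 2.1.5-wmf.4 (the value for mvn versions:set)."""
    m = _VERSION.match(snapshot_version)
    if m is None or m.group("snapshot") is None:
        raise ValueError(f"not a wmf snapshot version: {snapshot_version!r}")
    return f"{m.group('base')}-wmf.{m.group('n')}"


def next_snapshot(release: str) -> str:
    """2.1.5-wmf.4 -> 2.1.5-wmf.5-SNAPSHOT (the version master is bumped back to)."""
    m = _VERSION.match(release)
    if m is None or m.group("snapshot") is not None:
        raise ValueError(f"not a wmf release version: {release!r}")
    return f"{m.group('base')}-wmf.{int(m.group('n')) + 1}-SNAPSHOT"


if __name__ == "__main__":
    rel = release_version("2.1.5-wmf.4-SNAPSHOT")
    print(f"mvn versions:set -DnewVersion={rel}")
    print(f"mvn versions:set -DnewVersion={next_snapshot(rel)}")
```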

Administration

Hardware

We're currently running on the following servers:

- public cluster, eqiad: wdqs1004, wdqs1005, wdqs1006, wdqs1007, wdqs1012, wdqs1013

- public cluster, codfw: wdqs2001, wdqs2002, wdqs2003, wdqs2004, wdqs2007

- internal cluster, eqiad: wdqs1003, wdqs1008, wdqs1011

- internal cluster, codfw: wdqs2005, wdqs2006, wdqs2008

These clusters are in active/active mode (traffic is sent to both), but due to how we route traffic with GeoDNS, the primary cluster (usually eqiad) sees most of the traffic.

Server specs are similar to the following:

- CPU: dual Intel(R) Xeon(R) CPU E5-2620 v3

- Disk: 1600 GB of raw RAIDed SSD space

- RAM: 128GB

Monitoring

Grafana dashboard: https://grafana.wikimedia.org/d/000000489/wikidata-query-service

Grafana frontend dashboard: https://grafana.wikimedia.org/d/000000522/wikidata-query-service-frontend

I've got a new WDQS host, how do I get it ready for production?

This section explains how to go from a racked server with an OS, to a production WDQS server.

Create and Commit Puppet code

When a server is racked by DC Ops, they will add it to the Puppet repo's site.pp. See the Server Lifecycle page for more details on DC Ops' process.

Apply puppet role (Puppet patch 1)

Once DC Ops hands over the server, our first step is to move the server into one of the available wdqs puppet roles. As of this writing, the roles are wdqs::internal, wdqs::public, and wdqs::test, but you can see an up-to-date list by searching for 'wdqs' in the Puppet repo's site.pp file.

Suppress Alerts (Puppet patch 2)

Until the host is ready, you can suppress alerts by setting the hiera key profile::query_service::blazegraph::monitoring_enabled: false. See this patch for an example.

Once these changes are merged, run puppet agent on the host and it should be close to being ready.

Manual Steps (Likely to Change Frequently)

Once Puppet runs, there are a few manual steps required. These are temporary and subject to change. See this Phab ticket for more context.

- Remove /srv/deployment/wdqs/* and do a forced scap deploy

More details on why this is needed are in T342162.

- Run data-transfer cookbook

This is needed to get the actual Blazegraph data onto the server. As of this writing, the cookbook takes about 90 minutes to transfer all data (~1.2 TB) from another host in the same DC.

Monitor lag using the WDQS dashboard. If it's not dropping, check the updater service again.

- Manually start the load-dcatap-weekly.service

Additional context at T342361

Lastly,

- Remove the alert suppression you configured in "Puppet patch 2".

- Wait 30 minutes and confirm that there are no alerts in AlertManager/Icinga.

Application Deployment

Sources

The source code is in the Gerrit project wikidata/query/rdf (GitHub mirror).

The GUI source code is Gerrit project wikidata/query/gui (GitHub mirror), which is also a submodule of the main project.

The deployment version of the query service is in the Gerrit project wikidata/query/deploy, with the deployment version of the GUI, wikidata/query/gui-deploy (production branch), as a submodule.

Labs Deployment

Note that currently deployment is via git-fat (see below) which may require some manual steps after checkout. This can be done as follows:

- Check out the wikidata/query/deploy repository and update the gui submodule to the current production branch (git submodule update).

- Run git-fat pull to instantiate the binaries if necessary.

- rsync the files to the deploy directory (/srv/wdqs/blazegraph).

Use the role role::wdqs::labs for installing WDQS. You may also want to enable role::labs::lvm::srv to provide adequate disk space in /srv.

Command sequence for manual install:

git clone https://gerrit.wikimedia.org/r/wikidata/query/deploy
cd deploy
git fat init
git fat pull
git submodule init
git submodule update
sudo rsync -av --exclude .git\* --exclude scap --delete . /srv/wdqs/blazegraph

Production Deployment

Production deployment is done via git deployment repository wikidata/query/deploy. The procedure is as follows:

Initial Preparation

Preferred option: from Jenkins

- Log into Jenkins

- Go to https://integration.wikimedia.org/ci/job/wikidata-query-rdf-maven-release-wdqs/

- Select build with parameters

Note: If the job fails, the Archiva credentials may have changed. See https://wikitech.wikimedia.org/wiki/Analytics/Systems/Cluster/Deploy/Refinery-source#Changing_the_archiva-ci_password

Fallback option: from your own machine

- Run ./mvnw -Pdeploy-archiva release:prepare in the source repository. This updates the version numbers. If your system username is different from the one in SCM, use the -Dusername=... option.

- Run ./mvnw -Pdeploy-archiva release:perform in the source repository. This deploys the artifacts to Archiva.

Note that for the above you will need the repositories archiva.releases and archiva.snapshots configured in ~/.m2/settings.xml with your Archiva username/password. You will also need to have a GPG key set up.
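In Maven, repository credentials are declared as <server> entries in settings.xml, and each entry's <id> must match the repository id. The Python sketch below checks that both Archiva ids are present with a username and password. It assumes the usual ~/.m2/settings.xml location. The helper name is hypothetical, and the script does not check whether the credentials are actually valid.

```python
"""Sketch: check that ~/.m2/settings.xml has credentials for the Archiva repos.

In Maven, credentials live in <servers><server> entries whose <id> matches the
repository id. The helper name is hypothetical; credentials are not validated.
"""
import xml.etree.ElementTree as ET
from pathlib import Path

REQUIRED_IDS = {"archiva.releases", "archiva.snapshots"}


def _local(tag: str) -> str:
    # Strip the "{namespace}" prefix that settings.xml elements usually carry.
    return tag.rsplit("}", 1)[-1]


def missing_server_ids(settings_xml: str) -> set:
    """Return the required ids that lack a <server> with id, username and password."""
    root = ET.fromstring(settings_xml)
    complete = set()
    for server in root.iter():
        if _local(server.tag) != "server":
            continue
        fields = {_local(child.tag): (child.text or "").strip() for child in server}
        if fields.get("id") and fields.get("username") and fields.get("password"):
            complete.add(fields["id"])
    return REQUIRED_IDS - complete


if __name__ == "__main__":
    path = Path.home() / ".m2" / "settings.xml"
    missing = missing_server_ids(path.read_text()) if path.exists() else REQUIRED_IDS
    print("missing:", sorted(missing) or "none")
```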

Further required preparation

Prepare and submit the patch containing the new code updates:

- Run the deploy-prepare.sh <target version> script. It will create a commit with the newest version of the jars.

- Using the commit generated above, open a patch and get it approved and merged.

Test that the service is working as expected before we mutate it with a deploy, so that we can compare properly:

- Open a tunnel to the current wdqs canary instance via ssh -L 9999:localhost:80 wdqs1003.eqiad.wmnet (check that wdqs1003 is still the canary).

  - The canary is defined in the deploy repo within scap/scap.cfg as the wdqs-canary dsh group.

  - This dsh group is defined within the same repo in the file scap/wdqs-canary, with one host per line.

- Run the test script through the tunnel: from the rdf repo, run cd queries && ./test.sh -s http://localhost:9999/

- You may also want to navigate to http://localhost:9999 and run an example query.

Now we're ready for the actual deploy!

The actual code deploy

On the deployment server (deployment.eqiad.wmnet currently), cd /srv/deployment/wdqs/wdqs. First we'll get the repo into the desired state, then do the actual deploy.

- First run git fetch, then glance at git log HEAD...origin/master and manually verify that the expected commits are there, then run git rebase && git fat pull.

- Now that the repo is in the desired state, sanity check with ls -lah that you see the .war file and that it doesn't seem absurdly small.

- Use scap deploy '<the latest version number>' to deploy the new build. Once the canary deployment is done (scap will ask for confirmation to proceed), test the service. You can do that by SSH-tunneling to wdqs1003.eqiad.wmnet and running ./test.sh -s localhost:<tunneled_port> from the `queries` subdirectory.

- Validation #1: In a separate pane or tab, navigate to /srv/deployment/wdqs/wdqs and run/look at scap deploy-log.

- Validation #2: In yet another separate pane, tail the logs on the wdqs canary via tail -f /var/log/wdqs/wdqs-updater.log -f /var/log/wdqs/wdqs-blazegraph.log (note that we're tailing two log files at once, so the results will be interleaved).

- Once the canary deployment looks good, proceed to the rest of the fleet by pressing c.

Post code-deploy operational steps

These steps are kept in a separate subsection from the actual code deploy, but to be clear, they are a mandatory part of the deploy.

- Restart wdqs-updater after the deployment is complete (wdqs-updater can be safely restarted without user impact):

sudo -E cumin -b 4 'A:wdqs-all' 'systemctl restart wdqs-updater'

- Restart wdqs-categories one in-service node at a time (restarting it on a pooled node impacts users and thus requires depooling), like so:

sudo -E cumin -b 1 'A:wdqs-all and not A:wdqs-test' 'depool && sleep 45 && systemctl restart wdqs-categories && sleep 45 && pool'

- Restart wdqs-categories on the remaining wdqs-test nodes. They aren't pooled, so the above command can't be used for them:

sudo -E cumin 'A:wdqs-test' 'systemctl restart wdqs-categories'

- Run a basic test query on query.wikidata.org.

- Check Icinga (wdqs) to verify that there are no new warning/critical/unknown alerts, and also check Grafana to make sure everything looks good.

- Verify that the commit hash for the new deploy matches the correct commit hash from the deploy repo:

sudo -E cumin -b 4 'A:wdqs-all' 'ls -ld /srv/deployment/wdqs/wdqs'

Expected output: /srv/deployment/wdqs/wdqs-cache/revs/${COMMIT_HASH}
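The hash comparison can also be scripted. The sketch below assumes two things: that you have collected each host's ls -ld /srv/deployment/wdqs/wdqs output (for example from the cumin run) into a dictionary, and that the expected hash is the deploy repo's HEAD commit (for example from git rev-parse HEAD in the deploy checkout). Both the data layout and the helper names are assumptions.

```python
"""Sketch: compare the deployed revision on each host with the expected commit.

Assumption: per-host `ls -ld /srv/deployment/wdqs/wdqs` output has been
collected into a dict {host: line}; the helper names are hypothetical.
"""
from typing import Optional

REVS_PREFIX = "/srv/deployment/wdqs/wdqs-cache/revs/"


def deployed_hash(ls_line: str) -> Optional[str]:
    """Extract the commit hash from an `ls -ld` line (or a bare symlink target)."""
    target = ls_line.rsplit(" -> ", 1)[-1].strip()
    if not target.startswith(REVS_PREFIX):
        return None  # not a symlink into the scap revs cache
    return target[len(REVS_PREFIX):].strip("/")


def mismatched_hosts(outputs: dict, expected_hash: str) -> list:
    """Return, sorted, the hosts whose deployed revision differs from expected_hash."""
    return sorted(
        host for host, line in outputs.items() if deployed_hash(line) != expected_hash
    )
```

An empty result from mismatched_hosts means every host points at the expected revision.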

General puppet note (nothing to do here for a deploy): The puppet role that needs to be enabled for the service is role::wdqs.

It is recommended to test the deployment checkout on Beta before deploying it to production.

The test script is located at rdf/queries/test.sh.

GUI deployment general notes

GUI deployment files are in the production branch of the wikidata/query/gui-deploy repository. The GUI is deployed as a microsite.

A new deployment GUI version can be built by WDQSGuiBuilder from the latest master in the wikidata/query/gui repo by issuing a rebuild of the wikidata-query-gui-build Jenkins job; after the change has been uploaded (query), it needs to be manually +2ed (both “code review” and “verified”) and submitted. You can also run grunt deploy in the GUI directory to generate such a patch by hand (which still needs to be merged manually). Either way, after the gui-deploy repo has been updated, the microsite should update itself with the next Puppet run (after at most 30 minutes as of 2022-02-10).

Data reload procedure (SRE-level access required)

Warning

Reloading data is a time-consuming (~17 days) and fragile process. When possible, transfer data instead of reloading. As of this writing, a reload cannot be completed from codfw due to NFS issues, so be sure to use the eqiad datacenter only. You can then use the data transfer process to send the data from eqiad to codfw.

Reloading

Ensure clouddumps1001.wikimedia.org is mounted via NFS on the target host

Eventually we will move to rsync, but for now, you'll have to update Puppet to mount the clouddumps hosts via NFS on your target server. See the example PRs for mounting and for opening the software firewall. You can combine your changes into a single PR; we just screwed it up on those two ;).

Run the data-reload cookbook

Use the data-reload cookbook from cumin. Note that the process takes around 17 days to complete. Bugs in Blazegraph can cause the reload to corrupt itself. If that happens, you have to start the process over again.

Manual Process

The manual process is not used anymore, but is documented in this page's history just in case.

Data transfer procedure

Transferring data between nodes is typically faster than recovering from a dump. Port 9876 is open between the wdqs nodes of the same cluster for that purpose. Across different clusters, that port needs to be opened manually (and closed after the operation). The procedure is automated in a cookbook (adapt the hostnames, reason and task ID in the commands below):

- On the destination node, open the port if needed:

sudo iptables -A INPUT -p tcp -s wdqs1010.eqiad.wmnet --dport 9876 -j ACCEPT

- Copy the main data:

sudo -i cookbook sre.wdqs.data-transfer --source wdqs1010.eqiad.wmnet --dest wdqs2007.codfw.wmnet --without-lvs --blazegraph_instance blazegraph --reason "reloading data from wdqs1010 to wdqs2007 - some reason" --task-id T246343

- Copy the categories data:

sudo -i cookbook sre.wdqs.data-transfer --source wdqs1010.eqiad.wmnet --dest wdqs2007.codfw.wmnet --without-lvs --blazegraph_instance categories --reason "reloading data from wdqs1010 to wdqs2007 - some reason" --task-id T246343

- On the destination node, close the port if needed:

sudo iptables -D INPUT -p tcp -s wdqs1010.eqiad.wmnet --dport 9876 -j ACCEPT

Note:

By default the cookbook will depool / pool nodes automatically. The --without-lvs argument prevents this. This affects both source and destination, so in the case where the source is not behind LVS but the destination is, depooling / pooling must be done manually.
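Until the cookbook opens ports and iterates over instances itself, a sketch like the following can print the full command sequence for one source/destination pair. It only fills in the commands listed above for both the blazegraph and categories instances. The function name is hypothetical. The sketch defaults to --without-lvs, so adjust that flag according to the note above.

```python
"""Sketch: print the data-transfer command sequence for one source/destination pair.

It only fills in the commands documented above; running them is still manual.
The function name is hypothetical.
"""
import shlex

PORT = 9876  # port used between wdqs nodes for data transfer


def transfer_commands(source, dest, reason, task_id, without_lvs=True):
    """Return the shell commands for copying both Blazegraph instances."""
    open_port = f"sudo iptables -A INPUT -p tcp -s {source} --dport {PORT} -j ACCEPT"
    close_port = f"sudo iptables -D INPUT -p tcp -s {source} --dport {PORT} -j ACCEPT"
    commands = [f"# on {dest}: open the port if needed", open_port, "# on a cumin host"]
    for instance in ("blazegraph", "categories"):
        parts = ["sudo -i cookbook sre.wdqs.data-transfer",
                 f"--source {source}", f"--dest {dest}"]
        if without_lvs:
            parts.append("--without-lvs")  # skip automatic depool/pool
        parts += [f"--blazegraph_instance {instance}",
                  f"--reason {shlex.quote(reason)}", f"--task-id {task_id}"]
        commands.append(" ".join(parts))
    commands += [f"# on {dest}: close the port if needed", close_port]
    return commands


if __name__ == "__main__":
    for line in transfer_commands("wdqs1010.eqiad.wmnet", "wdqs2007.codfw.wmnet",
                                  "reloading data from wdqs1010 to wdqs2007", "T246343"):
        print(line)
```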

Potential improvements to the cookbook:

- open and close ports automatically

- iterate over multiple destination servers

- iterate over multiple data files (blazegraph / categories)

- support different depooling strategies for source and destination

- wait for the server to catch up on lag before repooling / terminating the cookbook

Updating federation allowlist

- Add the endpoint to allowlist.txt in the puppet repo. Commit & push to the repo.

- Run puppet agent on WDQS hosts. This will pull down the new allowlist file.

- Run the WDQS restart cookbook to activate the changes.

- After deploy, document on https://www.mediawiki.org/wiki/Wikidata_Query_Service/User_Manual/SPARQL_Federation_endpoints

- Update https://www.wikidata.org/wiki/Wikidata:SPARQL_query_service/Copyright

- If it was requested on https://www.wikidata.org/wiki/Wikidata:SPARQL_federation_input, move request to archive

- Check https://www.wikidata.org/wiki/Wikidata:SPARQL_query_service/Federation_report for signs of trouble periodically.

Manually updating entities

It is possible to update a single entity or a number of entities on each server in case data gets out of sync. The command to do it is:

cd /srv/deployment/wdqs/wdqs; bash runUpdate.sh -n wdq -N -S -- -b 500 --ids Q1234 Q5678 ...

To do this on all servers at once, tools like pssh can be used:

pssh -t 0 -p 20 -P -o logs -e elogs -H "$SERVERS" "cd /srv/deployment/wdqs/wdqs; bash runUpdate.sh -n wdq -N -S -- -b 500 --ids $*"

Here $SERVERS contains the list of servers to update. Because the IDs are passed on the command line, updating larger batches of IDs will need some scripting to split them into manageable chunks. Doing bigger updates at a moderate pace, with pauses so they don't interfere with regular updates, is recommended.
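Below is a minimal Python sketch of such batching for a single server, assuming the runUpdate.sh command shown above. The batch size and pause are illustrative values, not tuned recommendations. By default dry_run only prints the commands, which could then be passed to pssh instead. The helper names are hypothetical.

```python
"""Sketch: split a large list of entity IDs into runUpdate.sh batches.

Assumptions: IDs are passed on the command line as shown above; the batch
size and pause are illustrative, and the helper names are hypothetical.
"""
import shlex
import subprocess
import time

UPDATE_CMD = "cd /srv/deployment/wdqs/wdqs; bash runUpdate.sh -n wdq -N -S -- -b 500 --ids"


def chunks(ids, size):
    """Yield consecutive slices of at most `size` IDs, preserving order."""
    for start in range(0, len(ids), size):
        yield ids[start:start + size]


def update_in_batches(ids, size=100, pause=30.0, dry_run=True):
    """Run (or, with dry_run, just print) one update command per batch."""
    for batch in chunks(list(ids), size):
        cmd = f"{UPDATE_CMD} {' '.join(shlex.quote(i) for i in batch)}"
        if dry_run:
            print(cmd)
            continue
        subprocess.run(["bash", "-c", cmd], check=True)
        time.sleep(pause)  # leave room for the regular updater between batches
```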

Updating IDs by timeframe

Sometimes, due to some malfunction, a segment of updates for a certain time period gets lost. If it's a recent segment, the Updater can be reset to start from a certain timestamp by using --start TIMESTAMP --init (you have to shut down the regular Updater, reset the timestamp, and then start it again). If the missed segment is further in the past, the best way is to fetch the IDs that were updated in that time period using the Wikidata recentchanges API, and then update these IDs as described above.

An example of such a script can be found at https://phabricator.wikimedia.org/P8919. The output should be filtered and deduplicated, then fed to a script that calls the update script as described above.
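The following Python sketch illustrates the approach. It is not a copy of the linked paste. It pages through the recentchanges API for a time window and reduces the titles to unique entity IDs. It assumes the public www.wikidata.org API endpoint, namespaces 0 (items) and 120 (properties) as in the updater defaults, and ISO 8601 timestamps. Keep in mind that recentchanges only retains a limited window of history. The helper names are hypothetical.

```python
"""Sketch: collect entity IDs changed in a time window via the recentchanges API.

Assumptions: the public Wikidata API endpoint, namespaces 0|120, and ISO 8601
timestamps such as 2019-08-01T00:00:00Z; the helper names are hypothetical.
"""
import json
import urllib.parse
import urllib.request

API = "https://www.wikidata.org/w/api.php"


def entity_id(title: str) -> str:
    """'Q42' -> 'Q42', 'Property:P31' -> 'P31'."""
    return title.split(":", 1)[-1]


def unique_ids(titles) -> list:
    """Map titles to entity IDs, dropping duplicates but keeping first-seen order."""
    return list(dict.fromkeys(entity_id(t) for t in titles))


def fetch_titles(start: str, end: str, namespaces: str = "0|120"):
    """Yield titles of pages changed between start and end, oldest first."""
    params = {
        "action": "query", "list": "recentchanges", "format": "json",
        "rcstart": start, "rcend": end, "rcdir": "newer",
        "rcnamespace": namespaces, "rcprop": "title", "rclimit": "500",
    }
    while True:
        url = API + "?" + urllib.parse.urlencode(params)
        req = urllib.request.Request(url, headers={"User-Agent": "wdqs-recovery-sketch"})
        with urllib.request.urlopen(req, timeout=60) as response:
            data = json.load(response)
        for change in data["query"]["recentchanges"]:
            yield change["title"]
        if "continue" not in data:
            break
        params.update(data["continue"])  # standard API continuation
```

For example, unique_ids(fetch_titles("2019-08-01T00:00:00Z", "2019-08-01T06:00:00Z")) returns a deduplicated ID list that can be passed to the batching step above.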

Updating value or reference nodes

Since value (wdv:hash) and reference (wdref:hash) nodes are supposed to be immutable, they will not be touched by updates to items that use them. To fix these nodes, you need to delete all triples with these nodes as the subject (SPARQL DELETE through production access), then trigger an update (as above) for items that reference these nodes so that they are recreated (only one item per node is necessary).
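The DELETE step can be prepared as follows. This Python sketch assumes the standard WDQS prefixes (wdv: is http://www.wikidata.org/value/ and wdref: is http://www.wikidata.org/reference/) and hexadecimal node hashes. It only builds the SPARQL Update text, which still has to be run with production access. The helper name is hypothetical.

```python
"""Sketch: build a SPARQL Update that deletes all triples of stale value/reference nodes.

Assumptions: standard WDQS prefixes and hexadecimal hashes; the helper name
is hypothetical. The result still has to be executed with production access.
"""
import re

PREFIXES = {
    "wdv": "http://www.wikidata.org/value/",
    "wdref": "http://www.wikidata.org/reference/",
}


def delete_node_triples(prefix: str, hashes) -> str:
    """Return DELETE WHERE operations removing every triple with these nodes as subject."""
    base = PREFIXES[prefix]
    operations = []
    for h in hashes:
        if not re.fullmatch(r"[0-9a-f]+", h):
            raise ValueError(f"unexpected hash: {h!r}")
        operations.append(f"DELETE WHERE {{ <{base}{h}> ?p ?o }}")
    # SPARQL 1.1 Update allows several operations separated by ";".
    return " ;\n".join(operations)
```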

Notes about running the service on non-WMF infrastructure

This project was designed to support Wikidata and Commons MediaInfo, so it's not rare to stumble upon features where this assumption has been hardcoded, either directly in the code or as default values. Here are a few notes that may help if you are running this service for your own Wikibase installation.

runUpdate.sh options

- Blazegraph endpoint options (the endpoint has the form http://blazegraph_host:port/context/namespace):

  - -h scheme://blazegraph_host:port: the Blazegraph host and port to write to (defaults to http://localhost:9999)

  - -c url path: the context part (defaults to bigdata)

  - -n url path: the Blazegraph namespace (defaults to wdq)

- -t seconds: timeout in seconds for the update in Blazegraph to happen (defaults to -1: no timeout)

- -s: skip site links (site links are kept by default)

- Updater-specific options must be added after --:

  - --wikibaseUrl wikibase_url: the Wikibase URL the updater will connect to in order to fetch updates (defaults to https://www.wikidata.org/)

  - --conceptUri concept uris: the URI used to identify concepts. This should be the value set in entitySources under baseUri with one path segment stripped out (defaults to http://www.wikidata.org)

  - --entityNamespaces namespace ids: comma-separated list of namespace IDs (defaults to 0,120)

Example: for a Wikibase available at https://mywikibase.local/ whose config contains something like:

$wgWBRepoSettings['conceptBaseUri'] = "https://myentities.local/entity/";

and using default blazegraph options:

./runUpdate.sh -- --wikibaseUrl https://mywikibase.local/ --conceptUri https://myentities.local --entityNamespaces 0,120
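The option values for a custom installation can be derived mechanically. This Python sketch combines the endpoint layout described above with the /bigdata/namespace/wdq/sparql path used by the defaults. It also applies the "strip one path segment" rule for --conceptUri. The helper names are hypothetical and are not part of the WDQS tooling.

```python
"""Sketch: derive runUpdate.sh values for a custom Wikibase from its settings.

Assumptions: endpoint layout host/context/namespace/<namespace>/sparql and the
"strip one path segment" rule for --conceptUri; helper names are hypothetical.
"""
from urllib.parse import urlsplit


def endpoint_url(host="http://localhost:9999", context="bigdata", namespace="wdq"):
    """Compose the SPARQL URL from the -h, -c and -n values."""
    return f"{host.rstrip('/')}/{context}/namespace/{namespace}/sparql"


def concept_uri(concept_base_uri: str) -> str:
    """'https://myentities.local/entity/' -> 'https://myentities.local'."""
    parts = urlsplit(concept_base_uri)
    path = parts.path.rstrip("/").rsplit("/", 1)[0]  # drop the last path segment
    return f"{parts.scheme}://{parts.netloc}{path}"


def updater_args(wikibase_url, concept_base_uri, namespaces=(0, 120)):
    """Build the arguments that follow '--' on the runUpdate.sh command line."""
    return [
        "--wikibaseUrl", wikibase_url,
        "--conceptUri", concept_uri(concept_base_uri),
        "--entityNamespaces", ",".join(str(n) for n in namespaces),
    ]


if __name__ == "__main__":
    args = updater_args("https://mywikibase.local/", "https://myentities.local/entity/")
    print("./runUpdate.sh --", " ".join(args))
```

With the example configuration above, this reproduces the ./runUpdate.sh command shown.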

LDF (Linked Data Fragments) endpoint

The LDF endpoint is associated with a single WDQS host, because it has no easy way to track state across multiple hosts. The LDF endpoint's internal DNS name is "wdqs-ldf.discovery.wmnet", which is CNAME'd to the active host via the production DNS repo. Be sure to update this record if you change the host!

Issues

Known limitations

- Data drift: The update process is imperfect, data might drift over time as updates are missed. Different servers might have slightly different data sets. This is mitigated by reloading the full data set periodically. If you identify a specific entity that has drifted, please open a Phabricator task with the entity (or list of entities) that need to be reloaded.

Scaling strategy

See Wikidata_query_service/ScalingStrategy.

Contacts

If you need more info, talk to anybody on the Wikimedia Search Platform team.