Dumps/Testing historical

This test suite was last updated in 2013 for MediaWiki 1.17. There is no current test suite available, although there is a rough testing environment in mw-vagrant. Should it be revived? Should energy go into mw-vagrant or into a Docker image?

Access to source

To browse the source, use the Gerrit interface.

To check out a copy as a committer: git clone ssh://<user>@gerrit.wikimedia.org:29418/operations/dumps/test.git

To check out a copy as an anonymous user: git clone https://gerrit.wikimedia.org/r/operations/dumps/test.git

Basic usage of the test suite

- Note that xmldumps-test will modify the data in the Wiki that you specify below in the configuration file. So, do not use it on live wikis. You have been warned.

- Note that xmldumps-test will modify the LocalSettings.php (although creating a backup and restoring it afterwards) of the Wiki that you specify. So, do not use it on live wikis. You have been warned again.

- Get and set up xmldumps-backup (branch ariel). (See xmldumps-backup's README.installation for more details.)

- Get and set up xmldumps-test (branch master). (See xmldumps-test's README.installation for more details.)

- Create a test.conf configuration file for xmldumps-test (see test.conf.example for an example).

- Run ./run_tests.sh and you'll hopefully get output like

      Running test 01-Two page wiki ... ok
      Running test 05-text flags ... ok
      Running test 90-complex ... ok
      Running test 91-complex-with-FlaggedRevs ... ok
      Running test 92-complex-with-LiquidThreads ... ok
      Running test 93-complex-with-FlaggedRevs-and-LiquidThreads ... ok
      Running test 96-prefetch ... ok
      All 7 tests passed

- That's it.

Running all the tests (as shown above) takes ~10 minutes on a recent (2011) machine.

Instead of many small tests, the test suite on purpose comes with a few bigger tests, as each run of xmldumps-backup on its own takes ~1 minute even without much data. Do the maths yourself for what would happen if we instead went for 10000+ small isolated tests ;)

MediaWiki branches

xmldumps-test comes with tests (tests directory), fixtures (fixtures directory), and verified dumps (verified_dumps directory) for the current master branch of MediaWiki core. For older, still supported branches, xmldumps-test provides tests, fixtures, and verified dumps in the corresponding REL1_17, REL1_18, REL1_19, and REL1_20 directories. So you can easily test against older MediaWiki versions using those directories.

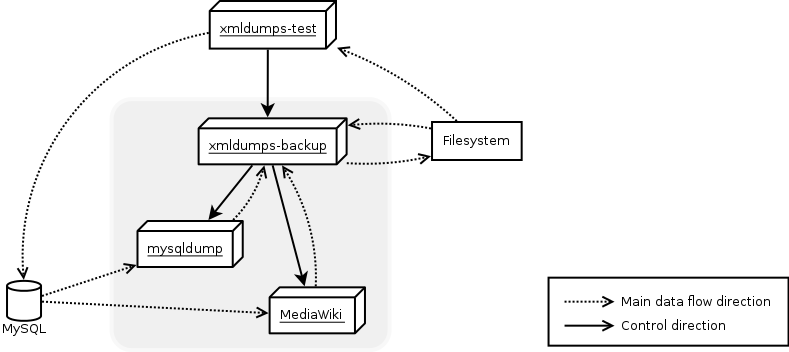

Big picture

The big picture of xmldumps-test's working is depicted in the following schematic overview:

For each test, xmldumps-test injects data into MySQL and prepares a LocalSettings.php as well as a configuration for xmldumps-backup. Afterwards, xmldumps-backup is started, which in turn orchestrates mysqldump and MediaWiki to produce the dump. In the end, xmldumps-test verifies the freshly produced dump against a pre-verified, known-good dump.
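The final verification step can be illustrated with a minimal sketch. It assumes that a byte-for-byte comparison of the produced dump against the verified dump is sufficient; the actual suite may decompress or normalise the dumps first. The function names `file_digest` and `dump_matches` are hypothetical and do not come from xmldumps-test.

```python
import hashlib
from pathlib import Path


def file_digest(path: Path, chunk_size: int = 1 << 20) -> str:
    """Return the SHA-256 hex digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def dump_matches(produced: Path, verified: Path) -> bool:
    """Assumption: a dump passes if it is byte-identical to the verified dump."""
    return file_digest(produced) == file_digest(verified)
```

Hashing in chunks keeps memory use flat even for large dump files.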

Detailed overview

For the sake of simplicity, the big picture above omits failure simulation and local cluster management.

- Failure simulation allows controlling (rate limiting, closing connections, ...) the MySQL connections and nfs connections.

- Local cluster management allows automatically configuring and starting a local replication cluster for tests.

Failure simulation

Failure simulation allows controlling both the connections to the MySQL server and writes to the permanent storage via nfs. The big picture for simulation is depicted by the following diagram:

The left half of the picture shows MySQL failure simulation (see the additional “Tcp Tunnel”), while the right half illustrates failure simulation for nfs (see the parts to the right of “Filesystem”).

Each of those failure simulation modules can be used

MySQL

Failure simulation for MySQL connections revolves around tunnelling tcp connections to the MySQL server and controlling this tunnel. Thereby, we gain full control over the connection, the data, and its flow back and forth. Each connection to the server can be controlled individually. So we can, for example, force reconnects on the database connection for external storage while DB_MASTER and DB_SLAVE are left unaffected.

The choice of a tcp tunnel comes with the downside that both MediaWiki and xmldumps-backup have to connect to the MySQL server through tcp. As IPC connections would bypass the tunnel, they must no longer be used. However, all necessary rerouting is managed transparently by xmldumps-test itself.
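To make the tunnelling idea concrete, here is a minimal sketch of a controllable tcp forwarder. It is not the tunnel implementation used by xmldumps-test: the class `TcpTunnel` and its method `drop_connections` are hypothetical names, and only one failure mode (abruptly closing all open connections, which forces clients to reconnect) is shown. Rate limiting is left out.

```python
import socket
import threading


def _close(sock):
    """Shut down and close a socket, ignoring errors if it is already closed."""
    try:
        sock.shutdown(socket.SHUT_RDWR)
    except OSError:
        pass
    sock.close()


class TcpTunnel:
    """Forward connections from a local port to a target server (sketch only)."""

    def __init__(self, listen_port, target_host, target_port):
        self.target = (target_host, target_port)
        self.connections = []  # (client, upstream) socket pairs seen so far
        self.lock = threading.Lock()
        self.server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
        self.server.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
        self.server.bind(("127.0.0.1", listen_port))
        self.server.listen()
        self.port = self.server.getsockname()[1]  # useful when listen_port is 0
        threading.Thread(target=self._accept_loop, daemon=True).start()

    def _accept_loop(self):
        while True:
            try:
                client, _ = self.server.accept()
            except OSError:
                return  # the listening socket was closed
            try:
                upstream = socket.create_connection(self.target)
            except OSError:
                _close(client)
                continue
            with self.lock:
                self.connections.append((client, upstream))
            # One thread per direction copies bytes between the two sockets.
            for src, dst in ((client, upstream), (upstream, client)):
                threading.Thread(
                    target=self._pump, args=(src, dst), daemon=True
                ).start()

    @staticmethod
    def _pump(src, dst):
        try:
            while True:
                data = src.recv(4096)
                if not data:
                    break
                dst.sendall(data)
        except OSError:
            pass
        finally:
            _close(src)
            _close(dst)

    def drop_connections(self):
        """Simulate a failure by closing every connection through the tunnel."""
        with self.lock:
            pairs, self.connections = self.connections, []
        for client, upstream in pairs:
            _close(client)
            _close(upstream)
```

With such a tunnel in front of a MySQL server, a client would be pointed at the tunnel's port instead of the server's port, and a test could call `drop_connections()` mid-dump to check how the dump process copes with a lost connection.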

Nfs

The machines we want to test on will likely rely on nfs to access other servers. We must not interfere with those connections, but nfs does not allow isolating individual connections.

However, typically no one mounts a local directory via nfs on the loopback interface.

We can exploit this fact to overcome the above limitations.

Therefore, we mount a local directory via nfs on the loopback interface and then control it indirectly by manipulating the firewall on the loopback interface for the nfs ports.

This approach adds some overhead and comes with some downsides, but is the only approach that reliably achieves the paramount goal of failure simulating nfs without affecting other nfs connections.
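As an illustration of the firewall manipulation, the sketch below only builds the command lines and does not run them. It assumes iptables as the firewall tool and tcp port 2049 as the nfs port; the page does not say which tool or ports xmldumps-test actually uses, and the helper name `nfs_block_rule` is hypothetical.

```python
NFS_PORT = 2049  # assumption: the standard nfs port; other ports may also matter


def nfs_block_rule(action, port=NFS_PORT):
    """Build an iptables command for loopback traffic to the given tcp port.

    "block" appends a DROP rule, "unblock" deletes that same rule again.
    Only traffic arriving on the loopback interface (lo) is matched, so nfs
    connections to other servers are not affected.
    """
    flag = {"block": "-A", "unblock": "-D"}[action]
    return [
        "iptables", flag, "INPUT",
        "-i", "lo",
        "-p", "tcp",
        "--dport", str(port),
        "-j", "DROP",
    ]
```

The returned list could be passed to `subprocess.run` by a privileged process. Restricting the rule to the loopback interface is what keeps the simulated failure away from real nfs mounts.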

Local cluster management

As production uses replication clusters, we want to test against replication clusters. But typically no one cares to set one up. So xmldumps-test takes on this duty and can set up, configure, ... a replication cluster for us. In the test specification, we only have to specify how many slave nodes we want to have and the rest is handled by xmldumps-test.

- master node:
  - port: 3307
  - server id: 1
  - Users:

| Username | Password | Description |
|---|---|---|
| root | master | Administrative user |
| $username | $password | As specified by the xmldumps-test configuration; typically in test.conf |
| Rslave1 | Rslave1 | Replication user for node slave1 |
| ... | ... | |
| RslaveN | RslaveN | Replication user for node slaveN |

- slavei node (where i in 1,2,...):
  - port: 3307+i
  - server id: 1+i
  - Users:

| Username | Password | Description |
|---|---|---|
| root | slavei | Administrative user |
| ... | ... | |
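The port and server id scheme above can be written out as a short sketch. The names `Node` and `cluster_nodes` are hypothetical and only serve the illustration; the numbers follow the lists above.

```python
from dataclasses import dataclass

MASTER_PORT = 3307  # port of the master node, as listed above


@dataclass(frozen=True)
class Node:
    name: str
    port: int
    server_id: int


def cluster_nodes(slave_count):
    """Return the master followed by slave1..slaveN with their ports and ids."""
    nodes = [Node("master", MASTER_PORT, 1)]
    for i in range(1, slave_count + 1):
        # slavei listens on 3307+i and uses server id 1+i
        nodes.append(Node(f"slave{i}", MASTER_PORT + i, 1 + i))
    return nodes
```

For two slaves, this yields the master on port 3307 (server id 1), slave1 on 3308 (server id 2), and slave2 on 3309 (server id 3).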

Local clusters and failure simulation

When combining local cluster management with failure simulation, xmldumps-test automatically sets up tunnels for the relevant tcp connections and reroutes the traffic through them. Here, #nodes is the total number of nodes in the cluster, including the master. The tunnel for the master node listens on port 3307+#nodes. The tunnel for node slavei listens on port 3307+#nodes+i. The tunnel for the replication connection of node slavei listens on port 3307+2*#nodes+i.
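The tunnel port arithmetic can be checked with a small sketch. It assumes that #nodes counts the master as well as the slaves, which matches the 3-node example (a master and 2 slave nodes) mentioned on this page. The function name `tunnel_ports` and the dictionary keys are hypothetical.

```python
BASE_PORT = 3307  # port of the master node itself


def tunnel_ports(node_count):
    """Map each tunnel to its listening port; node_count includes the master."""
    ports = {"master": BASE_PORT + node_count}
    for i in range(1, node_count):
        ports[f"slave{i}"] = BASE_PORT + node_count + i
        ports[f"slave{i}-replication"] = BASE_PORT + 2 * node_count + i
    return ports
```

For a cluster of 3 nodes, the nodes themselves occupy ports 3307 to 3309, the master tunnel listens on 3310, the tunnels for slave1 and slave2 on 3311 and 3312, and their replication tunnels on 3314 and 3315.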

The following diagram depicts the tunnels and corresponding listening ports for a cluster with 3 nodes (i.e., a master and 2 slave nodes).

Decisions

- The only MediaWiki extensions to test are LiquidThreads (although it is marked as experimental), and FlaggedRevs. Further extensions can be added at a later time, by setting up new fixtures.

- ExternalStoreHttp does not get tested. Setting up ExternalStoreHttp is simple, but testing it would require that the tested machine has access to an HTTP server that serves fixed content for a fixed URL. As ExternalStoreHttp does not seem to be currently used on MediaWiki Wikis (according to Ariel), it was decided not to test ExternalStoreHttp.