Data Platform/Systems/Airflow/Upgrading

We have the following procedures in place to upgrade Airflow versions.

Configure feature branch

Check out a working copy of the https://gitlab.wikimedia.org/repos/data-engineering/airflow-dags repository

Create a feature branch, for example: git checkout -b airflow_version_2_6

Determine the new package version string, which is composed of:

- The Airflow major, minor, and patch versions

- The Python environment major and minor versions

- The creation date, in YYYYMMDD format

For example: 2.6.0-py3.10-20230510
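The version string can be assembled from its three components. The following is a minimal sketch. make_package_version is a hypothetical helper, not part of the airflow-dags repository, and it assumes the date component is the build date in YYYYMMDD form, as in the example.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build a package version such as 2.6.0-py3.10-20230510.
# Arguments: Airflow version (major.minor.patch), Python version (major.minor),
# and an optional date in YYYYMMDD form (defaults to today's date).
make_package_version() {
  local airflow_version="$1" python_version="$2"
  local build_date="${3:-$(date +%Y%m%d)}"
  printf '%s-py%s-%s\n' "$airflow_version" "$python_version" "$build_date"
}
```

For example, make_package_version 2.6.0 3.10 20230510 prints 2.6.0-py3.10-20230510.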

Update conda environment

Update conda-environment.yml to use the new version of Airflow.

Run ./generate_conda_environment_lock_yml.sh. It updates conda-environment.lock.yml with the exact package versions resolved for the environment.

The generated lock file may need manual edits before you proceed. One known issue is that the script removes the GitLab URLs from the workflow_utils and conda-pack packages. Restore those URLs before continuing.
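Reviewing the lock file diff is one way to spot the stripped URLs. The following is a minimal sketch. It assumes the original entries referenced gitlab.wikimedia.org URLs (the exact lock-file layout is an assumption), and removed_gitlab_urls is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print diff lines that were removed and that referenced
# gitlab.wikimedia.org, e.g. workflow_utils or conda-pack entries.
# Reads a unified diff on stdin; prints nothing when no such line was removed.
removed_gitlab_urls() {
  # Removed lines start with a single "-"; "^-[^-]" skips the "--- a/..." header.
  grep -E '^-[^-].*gitlab\.wikimedia\.org' || true
}
```

Usage: git diff conda-environment.lock.yml | removed_gitlab_urls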

Run ./check_conda_environment_lock_yml.sh to verify that a conda environment can actually be created from the lock file. On success, it prints:

An environment could be created form conda-environment.lock.yml

Execute unit tests

Execute the following:

export PYTHONPATH=.:./wmf_airflow_common/plugins

tox

tox -e lint

Ensure that all tests and lint checks pass.

Update debian packaging parameters

Update the .gitlab-ci.yml and Dockerfile files with the new package version.

Add an entry to debian/changelog. The dch command makes this simpler: dch -v 2.6.0-py3.10-20230510 -D buster-wikimedia --force-distribution

Stage your changes for commit, including the conda environment files updated earlier: git add .gitlab-ci.yml Dockerfile debian/changelog conda-environment.yml conda-environment.lock.yml

Commit your changes with git commit.

Build the package with GitLab CI

Push your branch to GitLab with git push --set-upstream origin airflow_version_2_6

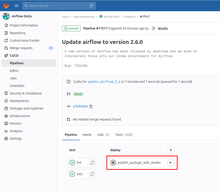

Browse to the feature branch in GitLab and locate the CI pipeline for the branch, as shown in the following image.

Click the triangle (play) button on the publish_airflow_package_with_docker stage, the one that shows the PACKAGE_VERSION=x.y.z variable.

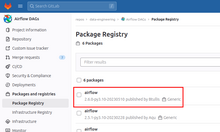

When the stage has built successfully, navigate to the Package Registry section of the repository.

Locate the package that has been built.

Copy the download link and download this file to your test host with a command like the following:

curl -fL -o airflow-2.6.0-py3.10-20230510_amd64.deb https://gitlab.wikimedia.org/repos/data-engineering/airflow-dags/-/package_files/1257/download
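Before installing, you may want to confirm that the downloaded file carries the expected Debian Version field. The file name served by GitLab does not always include the full version string, as the rename step on apt1002 shows. The following sketch uses dpkg-deb --field, and verify_deb_version is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: check that a .deb's control Version field matches the
# version you expect before installing or publishing it.
# Requires dpkg-deb, which ships with dpkg on Debian hosts.
verify_deb_version() {
  local deb_file="$1" expected="$2" actual
  # With a single field name, dpkg-deb --field prints only that field's value.
  actual="$(dpkg-deb --field "$deb_file" Version)" || return 1
  if [[ "$actual" != "$expected" ]]; then
    echo "version mismatch: ${deb_file} has '${actual}', expected '${expected}'" >&2
    return 1
  fi
  echo "${deb_file}: Version ${actual}"
}
```

Usage: verify_deb_version airflow-2.6.0-py3.10-20230510_amd64.deb 2.6.0-py3.10-20230510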

[SRE Only] Install the package with sudo apt install ./airflow-2.6.0-py3.10-20230510_amd64.deb. The ./ prefix tells apt to install a local file rather than look up a package by that name.

Run the database check command: sudo -u analytics airflow-analytics_test db check

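Run the database upgrade command: sudo -u analytics airflow-analytics_test db upgrade. From Airflow 2.7.0 onwards, db upgrade is deprecated in favor of db migrate, which the production steps below use.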
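If the same steps need to cover Airflow versions on both sides of the 2.7.0 change (db upgrade to db migrate), a small helper can pick the subcommand. db_subcommand_for is hypothetical, and it relies on sort -V (GNU coreutils) for version ordering.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print "migrate" for Airflow >= 2.7.0, else "upgrade".
# Accepts a plain version (2.9.3) or a package version (2.6.0-py3.10-20230510).
db_subcommand_for() {
  local airflow_version="${1%%-*}"   # strip any "-py3.10-YYYYMMDD" suffix
  local lowest
  # sort -V orders version strings numerically per component (2.10 > 2.7).
  lowest="$(printf '%s\n' 2.7.0 "$airflow_version" | sort -V | head -n 1)"
  if [[ "$lowest" == "2.7.0" ]]; then
    echo migrate
  else
    echo upgrade
  fi
}
```

Usage: sudo -u analytics airflow-analytics_test db "$(db_subcommand_for 2.6.0-py3.10-20230510)"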

Once the upgrade has been verified on the test instance, publish the new deb to our APT repositories. The following transcript from the 2.9.3 upgrade on apt1002 shows the steps:

root@apt1002:~# wget https://gitlab.wikimedia.org/api/v4/projects/93/packages/generic/airflow/2.9.3/airflow-2.9.3_amd64.deb

--2024-09-16 10:47:06-- https://gitlab.wikimedia.org/api/v4/projects/93/packages/generic/airflow/2.9.3/airflow-2.9.3_amd64.deb

Resolving gitlab.wikimedia.org (gitlab.wikimedia.org)... 2620:0:860:1:208:80:153:8, 208.80.153.8

Connecting to gitlab.wikimedia.org (gitlab.wikimedia.org)|2620:0:860:1:208:80:153:8|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 579378076 (553M) [application/octet-stream]

Saving to: ‘airflow-2.9.3_amd64.deb’

airflow-2.9.3_amd64.deb 100%[=====>] 552.54M 110MB/s in 5.5s

2024-09-16 10:47:12 (101 MB/s) - ‘airflow-2.9.3_amd64.deb’ saved [579378076/579378076]

root@apt1002:~# mv airflow-2.9.3_amd64.deb airflow-2.9.3-py3.10-20240916_amd64.deb

root@apt1002:~# reprepro includedeb bullseye-wikimedia `pwd`/airflow-2.9.3-py3.10-20240916_amd64.deb

Exporting indices...

root@apt1002:~# reprepro includedeb buster-wikimedia `pwd`/airflow-2.9.3-py3.10-20240916_amd64.deb

Exporting indices...

Deleting files no longer referenced...

root@apt1002:~# reprepro ls airflow

airflow | 2.9.3-py3.10-20240916 | buster-wikimedia | amd64

airflow | 2.9.3-py3.10-20240916 | bullseye-wikimedia | amd64
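The rename-and-include steps in the transcript can be collected into one function so the two distributions stay in sync. This is a sketch, not an existing tool. publish_airflow_deb is hypothetical, and it assumes it runs as root on the APT host, in the directory holding the downloaded file, with the distribution names taken from the transcript.

```shell
#!/usr/bin/env bash
# Hypothetical helper: rename a downloaded deb to carry the full package
# version, add it to both Wikimedia distributions, then list what reprepro holds.
# Usage: publish_airflow_deb airflow-2.9.3_amd64.deb 2.9.3-py3.10-20240916
publish_airflow_deb() {
  local src="$1" version="$2" dest dist
  dest="airflow-${version}_amd64.deb"
  [[ "$src" == "$dest" ]] || mv -- "$src" "$dest" || return 1
  for dist in bullseye-wikimedia buster-wikimedia; do
    # Absolute path, mirroring the `pwd`/... form used in the transcript.
    reprepro includedeb "$dist" "${PWD}/${dest}" || return 1
  done
  reprepro ls airflow
}
```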

Next, send a Puppet patch stack that upgrades each instance individually, followed by a final patch that makes the new version the default (example). On each instance, after Puppet has installed the new deb, run the following commands to restart the Airflow services and then check and migrate the database:

sudo systemctl restart airflow-{webserver,scheduler,kerberos}@*.service

sudo -u $(sudo systemctl cat airflow-scheduler@*.service | grep User | cut -d= -f2) $(sudo systemctl cat airflow-scheduler@*.service | grep ExecStart | cut -d= -f2 | awk '{ print $1 }') db check

sudo -u $(sudo systemctl cat airflow-scheduler@*.service | grep User | cut -d= -f2) $(sudo systemctl cat airflow-scheduler@*.service | grep ExecStart | cut -d= -f2 | awk '{ print $1 }') db migrate
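Each of the two one-liners above parses the scheduler's systemd unit twice to find its service user and Airflow executable. The following sketch reads the unit once and reuses the result. The function names are hypothetical. Like the original, it assumes a single airflow-scheduler@ instance per host, and it uses only the first User= and ExecStart= lines.

```shell
#!/usr/bin/env bash
# Hypothetical helpers: extract values from `systemctl cat` output on stdin.
unit_user() {
  sed -n 's/^User=//p' | head -n 1
}

unit_exec_binary() {
  # First word of ExecStart=, i.e. the Airflow wrapper executable.
  sed -n 's/^ExecStart=//p' | head -n 1 | awk '{ print $1 }'
}

# Run an `airflow db` subcommand as the scheduler's user, e.g.
#   airflow_db check
#   airflow_db migrate
airflow_db() {
  local unit_text user bin
  unit_text="$(sudo systemctl cat 'airflow-scheduler@*.service')" || return 1
  user="$(unit_user <<<"$unit_text")"
  bin="$(unit_exec_binary <<<"$unit_text")"
  if [[ -z "$user" || -z "$bin" ]]; then
    echo "could not find User= or ExecStart= in the scheduler unit" >&2
    return 1
  fi
  sudo -u "$user" "$bin" db "$@"
}
```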