
Why do we need bot detection?

Wikipedia's content is read by humans and by automated agents, which are scripts with varying levels of sophistication. These automated scripts (commonly called 'bots') can be as complex as a major search engine crawler that "reads" and indexes all of Wikipedia. But they can also be as simple as a small script someone wrote to scrape a couple of pages of interest. We also see bot vandalism: for example, scripts that try to push pages of a sexual nature onto the top-read list of a smaller site.

The first step in sorting out this traffic is to mark the requests in which bots identify themselves as such in the user agent (one of the HTTP headers) by using the word 'bot'. We label this kind of traffic 'spider'.

This leaves a significant amount of traffic that, while "automated" in nature, is not marked as such. The biggest problem with mislabeling this traffic is not its overall effect on the pageview metric but its impact on any kind of top-pageview list. For example, see the event with the United States Senate page, which appeared (in the midst of the COVID-19 pandemic) as the most visited page on English Wikipedia. For a while now, the community has been applying filtering rules to every "Top X" list that we compile[1], and we have incorporated some of these filters into our automated traffic detection.

We feel it is important to mention that we are not aiming to remove every single instance of bot traffic, but rather the most egregious cases of automated traffic that does not self-identify as such.

From our analysis (detailed below), overall user pageviews drop by about 5% when we label automated traffic as such. The drop reaches 8% in some instances but mostly stays around 5.5%. The effect is not evenly distributed across sites.

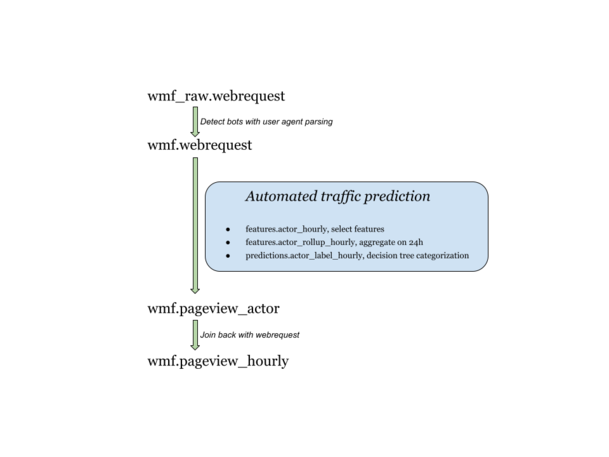

A condensed version of this explanation is available on our tech blog: Identifying "fake" traffic on Wikipedia. The sections below walk through our data pipelines from the perspective of automated traffic detection.

Current state of automated traffic detection in our pipeline

Raw webrequest dataset (Hive table wmf_raw.webrequest)

The raw webrequest dataset is the unprocessed source. It contains all traffic, including all automated traffic.

The refine job on webrequest creates the webrequest dataset (Hive table wmf.webrequest)

In this HQL job, we categorize requests into two groups, "user" and "spider", stored in the agent_type field.

For that, we examine the request user agents in two ways:

- On one side, the user agent strings are parsed with the ua-parser collection of regexes, which gives us the browser, the OS, and the device of each request. We label the request as 'spider' if device_family = 'Spider'.

- On the other side, we match the whole user agent string against a homemade regex (e.g., we check for the inclusion of the keyword "bot").

This detection is sufficient only when bots declare themselves properly in the user agent.
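The two refine-time checks can be sketched in Python as below. This is a minimal illustration, not the refinery code: `device_family` is assumed to be the value ua-parser produced, and `BOT_KEYWORD` is a hypothetical stand-in for the homemade regex, which is not reproduced here.

```python
import re

# Hypothetical stand-in for the homemade regex: the production pattern is
# not reproduced here; this one only checks for the keyword "bot".
BOT_KEYWORD = re.compile(r"bot", re.IGNORECASE)


def refine_agent_type(device_family: str, user_agent: str) -> str:
    """Label a request 'spider' or 'user' at refine time.

    device_family is assumed to be the value produced by ua-parser.
    """
    # Check 1: ua-parser already recognized the agent as a spider.
    if device_family == "Spider":
        return "spider"
    # Check 2: the raw user agent string self-identifies as a bot.
    if BOT_KEYWORD.search(user_agent):
        return "spider"
    return "user"


print(refine_agent_type("Other", "MyScraperBot/1.0 (contact@example.org)"))  # spider
print(refine_agent_type("Other", "Mozilla/5.0 (X11; Linux x86_64) Firefox/115.0"))  # user
```

A script that sends an ordinary browser user agent passes both checks as 'user', which is the gap the following steps address.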

A job then computes user activity stats (Hive table features.actor_hourly)

The notion of an "actor" is loosely similar to a web session: a sequence of interactions between a user (or a bot) and a wiki server. This HQL job prepares an hourly summary of each actor's activity (e.g., first page loaded, number of pages, ...) and, more generally, all the stats needed to run the later detection step.

Only "text" requests that are considered pageviews on our websites are counted. Pageview categorization is out of scope here; in short, it ensures that the request resembles a user opening a page on one of our MediaWiki sites.

GoogleWebLight traffic is also excluded at this step for simplicity.

We keep six parameters (called features) for each actor active during the hour:

- First & last interactions with the website

- Number of pages seen

- Number of pages seen per minute

- Whether the actor uses cookies

- Length of the user agent

- Number of distinct pages visited
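A minimal Python sketch of the per-hour feature computation for one actor follows. The `Request` record, its field names, and encoding "uses cookies" as a count of cookieless requests (`nocookies`) are assumptions for illustration; the real job is written in HQL.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Request:
    ts: datetime         # time of the pageview request
    page_title: str      # title of the page viewed
    has_cookies: bool    # whether the request carried cookies (assumed flag)
    user_agent: str


def actor_features(requests: list[Request]) -> dict:
    """Summarize one actor's pageviews within one hour as the six features."""
    first = min(r.ts for r in requests)
    last = max(r.ts for r in requests)
    pageviews = len(requests)
    # Treat the active span as at least one minute so a burst of requests in
    # the same instant does not divide by zero (an assumption of this sketch).
    minutes = max((last - first).total_seconds() / 60, 1.0)
    return {
        "first_interaction": first,
        "last_interaction": last,
        "pageview_count": pageviews,
        "pageviews_per_minute": pageviews / minutes,
        # Cookie usage encoded as a count of cookieless requests (assumption).
        "nocookies": sum(not r.has_cookies for r in requests),
        # The actor signature is computed from the user agent, so it is constant here.
        "user_agent_length": len(requests[0].user_agent),
        "distinct_pages": len({r.page_title for r in requests}),
    }


ua = "Mozilla/5.0 (X11; Linux x86_64) Firefox/115.0"
t0 = datetime(2020, 3, 1, 10, 0)
example = actor_features([
    Request(t0, "Main_Page", True, ua),
    Request(t0 + timedelta(minutes=1), "Darth_Vader", True, ua),
    Request(t0 + timedelta(minutes=2), "Main_Page", False, ua),
])
print(example["pageviews_per_minute"])  # 1.5
```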

Aggregate stats over the last 24 hours (Hive table features.actor_rollup_hourly)

Some user sessions span multiple hours. To get a more realistic picture of each session, an HQL script aggregates the data over a window covering the last 24 hours, ending at the computed hour.
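The rollup can be sketched as follows, assuming each hourly row for an actor carries the fields from the previous step and an `hour` timestamp (hypothetical names). Counts are summed, first and last interactions take the min and max, and the rate is recomputed over the whole span. Distinct pages cannot be summed exactly from hourly counts, so this sketch leaves that feature out.

```python
from __future__ import annotations

from datetime import datetime, timedelta


def rollup_24h(hourly_rows: list[dict], computed_hour: datetime) -> dict | None:
    """Aggregate one actor's hourly rows over the 24 hours ending at computed_hour."""
    window_start = computed_hour - timedelta(hours=23)  # hours H-23 .. H inclusive
    rows = [r for r in hourly_rows if window_start <= r["hour"] <= computed_hour]
    if not rows:
        return None  # actor not active in the window
    first = min(r["first_interaction"] for r in rows)
    last = max(r["last_interaction"] for r in rows)
    pageviews = sum(r["pageview_count"] for r in rows)
    minutes = max((last - first).total_seconds() / 60, 1.0)
    return {
        "first_interaction": first,
        "last_interaction": last,
        "pageview_count": pageviews,
        "nocookies": sum(r["nocookies"] for r in rows),
        "pageviews_per_minute": pageviews / minutes,
    }


H = datetime(2020, 3, 2, 0)
# 30 hourly rows going back from H; only the 24 most recent fall in the window.
example_rows = [
    {
        "hour": H - timedelta(hours=k),
        "first_interaction": H - timedelta(hours=k),
        "last_interaction": H - timedelta(hours=k) + timedelta(minutes=30),
        "pageview_count": 10,
        "nocookies": 1,
    }
    for k in range(30)
]
summary = rollup_24h(example_rows, H)
print(summary["pageview_count"])  # 240
```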

Classify the pageview actors (Hive table predictions.actor_label_hourly)

Every hour, an HQL script computes a label for each session using the feature values computed over the previous 24 hours. The label can be "user", "automated", or "unclassified".

The categorization is performed with a decision tree. The decisions are applied in this order in the HQL file:

- a mobile session with few pageviews is a user (threshold: 10 pageviews).

- a session with many pageviews is a bot (threshold: 800 pageviews).

- a session with a high frequency of pageviews is a bot (threshold: 30 pageviews/minute).

- a session mostly without cookies and with a high frequency of pageviews is a bot (threshold: 30 pageviews/minute).

- a session with too many requests without cookies and low page_title variability is a bot.

- a session whose user agent is too long or too short is a bot (length outside the 25 to 400 character range).

- a session with a very low pageview rate is a user (~0 pageviews/minute).
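The rule order above can be sketched as a plain Python function. The thresholds for rules 1, 2, 3, 4, and 6 come from the list; the values for "mostly without cookies", "too many requests without cookies", "low page_title variability", and "~0 pageviews/minute" are hypothetical, as is whether each bound is strict. Feature names follow the earlier sketches, plus an assumed `is_mobile` flag.

```python
from __future__ import annotations

# Thresholds listed in the text.
MOBILE_MAX_PAGEVIEWS = 10
MAX_PAGEVIEWS = 800
MAX_RATE = 30                 # pageviews per minute
UA_MIN_LEN, UA_MAX_LEN = 25, 400

# Hypothetical values: the text does not give them.
NOCOOKIE_MAJORITY = 0.5       # "mostly without cookies"
NOCOOKIE_MIN_REQUESTS = 20    # "too many requests without cookies"
MIN_TITLE_VARIABILITY = 0.1   # distinct pages / pageviews
LOW_RATE = 0.1                # "~0 pageviews/minute"


def label_actor(f: dict) -> tuple[str, str]:
    """Return (label, reason), applying the rules in the documented order."""
    pv = f["pageview_count"]
    rate = f["pageviews_per_minute"]
    nocookie_share = f["nocookies"] / pv if pv else 0.0
    ua_len = f["user_agent_length"]

    if f["is_mobile"] and pv < MOBILE_MAX_PAGEVIEWS:
        return "user", "mobile session with few pageviews"
    if pv > MAX_PAGEVIEWS:
        return "automated", "too many pageviews"
    if rate > MAX_RATE:
        return "automated", "high pageview rate"
    # With the thresholds as listed, the previous rule already catches every
    # case of this one; it is kept only to mirror the documented order.
    if nocookie_share > NOCOOKIE_MAJORITY and rate > MAX_RATE:
        return "automated", "high pageview rate without cookies"
    if f["nocookies"] >= NOCOOKIE_MIN_REQUESTS and pv and f["distinct_pages"] / pv < MIN_TITLE_VARIABILITY:
        return "automated", "many cookieless requests on few titles"
    if not UA_MIN_LEN <= ua_len <= UA_MAX_LEN:
        return "automated", "user agent length out of range"
    if rate < LOW_RATE:
        return "user", "very low pageview rate"
    return "unclassified", "no rule matched"


EXAMPLE = {
    "is_mobile": False,
    "pageview_count": 50,
    "pageviews_per_minute": 1.0,
    "nocookies": 0,
    "user_agent_length": 100,
    "distinct_pages": 40,
}
print(label_actor(EXAMPLE))  # ('unclassified', 'no rule matched')
```

Keeping the rules as an ordered list of early returns mirrors how a decision tree is read top to bottom, and the returned reason plays the same role as the label_reason column used in the Code section.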

Studies have evaluated the gain from replacing these heuristics with an ML model for classification, and concluded that it is not worth the added complexity. However, the heuristics and their thresholds were determined both from experience and from the results of the ML model (a decision tree classifier).

Add the automated classification to webrequest (Hive table wmf.pageview_actor)

To create the pageview actor dataset, we:

- join webrequest with actor_label_hourly

- keep only pageviews or redirects to pageviews

- discard duplicated rows
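The three steps above can be sketched in Python on in-memory rows. This is an illustration under stated assumptions, not the production job: rows are dicts with hypothetical field names (`is_redirect_to_pageview` among them), the join is assumed to behave like a left join keyed on actor_signature, and only 'user' rows are switched to 'automated' (spider rows keep their label).

```python
from __future__ import annotations


def build_pageview_actor(webrequests: list[dict], labels: dict[str, str]) -> list[dict]:
    """Filter pageviews, apply actor labels, and drop exact duplicate rows."""
    seen = set()
    result = []
    for req in webrequests:
        # Keep only pageviews or redirects to pageviews.
        if not (req["is_pageview"] or req["is_redirect_to_pageview"]):
            continue
        row = dict(req)
        # Assumed left-join semantics: unlabelled actors keep their agent_type.
        if labels.get(row["actor_signature"]) == "automated" and row["agent_type"] == "user":
            row["agent_type"] = "automated"
        # Discard exact duplicate rows.
        key = tuple(sorted(row.items()))
        if key in seen:
            continue
        seen.add(key)
        result.append(row)
    return result


def req(sig, is_pv, is_redirect, agent, title):
    return {"actor_signature": sig, "is_pageview": is_pv,
            "is_redirect_to_pageview": is_redirect, "agent_type": agent, "page_title": title}


requests = [
    req("a", True, False, "user", "X"),
    req("a", True, False, "user", "X"),     # exact duplicate
    req("b", False, False, "user", "Y"),    # not a pageview
    req("c", False, True, "user", "Z"),     # redirect to a pageview
    req("d", True, False, "spider", "W"),
]
labels = {"a": "automated", "c": "user", "d": "automated"}
pageview_actor = build_pageview_actor(requests, labels)
print([r["agent_type"] for r in pageview_actor])  # ['automated', 'user', 'spider']
```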

Create other datasets

Other datasets using pageview actors:

- clickstream

- pageviews hourly

- ...

See the traffic page for more details.

See also the page about automated traffic correction in unique devices for an example of how these labels are used.

Effect of automated-traffic detection on Pageview metric - March 2020

The automated-traffic detection method changes the agent_type field of some pageviews from user to automated. Therefore, the number of user pageviews decreases once the mechanism is deployed.

Effect on most-viewed pages list (top)

Bot detection changes are most visible in lists that compile top pageviews (per project, or per project and country). There are two reasons why the bot detection code significantly impacts these lists: 'bot vandalism' and 'bot spam'. 'Bot vandals' are bots whose only goal seems to be adding obscene, sexual, or political content to the top pageview list of a given project. In a recent instance of bot vandalism on Hungarian Wikipedia, a significant percentage of the pages on the top pageview list were bogus titles. We use data from events like this one to verify the accuracy of our removal; in this particular incident, it was very effective. Other incidents, with lower pageview volumes, make it harder to detect that the traffic might be automated.

The second most common effect we see on top pageview lists is 'bot spam'. Some bots request a given page over and over with unknown intent; in some instances, the bot seems to be trying to manipulate one of Wikipedia's top pageview lists to gain popularity for a topic. See, for example, a recent case on German Wikipedia. Bot spam is normally temporary, and the page soon disappears from the top pageview list. However, some pages have had sustained automated traffic for years. The Darth Vader page in the English Wikipedia top list is a good example.
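The effect of relabelling on a top list can be sketched in Python: the same pageview rows give a different ranking depending on whether automated-labelled views are counted as user views (as before detection) or excluded. The titles and counts below are made up for illustration; spider traffic is excluded in both cases.

```python
from __future__ import annotations

from collections import Counter


def top_pages(pageviews: list[dict], n: int, include_automated: bool) -> list[tuple[str, int]]:
    """Rank page titles by views, optionally counting automated views as user views."""
    allowed = {"user", "automated"} if include_automated else {"user"}
    counts = Counter()
    for pv in pageviews:
        if pv["agent_type"] in allowed:
            counts[pv["page_title"]] += pv["view_count"]
    return counts.most_common(n)


# Illustrative numbers only.
views = [
    {"page_title": "Page_A", "agent_type": "user", "view_count": 50},
    {"page_title": "Page_B", "agent_type": "user", "view_count": 30},
    {"page_title": "Darth_Vader", "agent_type": "user", "view_count": 2},
    {"page_title": "Darth_Vader", "agent_type": "automated", "view_count": 100},
    {"page_title": "Page_S", "agent_type": "spider", "view_count": 500},
]
print(top_pages(views, 2, include_automated=True))   # [('Darth_Vader', 102), ('Page_A', 50)]
print(top_pages(views, 2, include_automated=False))  # [('Page_A', 50), ('Page_B', 30)]
```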

Global impact - all Wikimedia projects

Over the month of March 2020, for all projects, the number of pageviews by agent_type is as follows:

| agent_type | sum(view_count) | % |
|---|---|---|
| user | 16202727427 | 71.55% |
| spider | 5188363262 | 22.91% |
| automated | 1253707204 | 5.54% |
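The percentage column can be reproduced from the view counts in the table: each share is the agent_type's view count divided by the total across all three types.

```python
# View counts for March 2020, copied from the table above.
view_counts = {"user": 16202727427, "spider": 5188363262, "automated": 1253707204}

total = sum(view_counts.values())
shares = {agent: round(100 * count / total, 2) for agent, count in view_counts.items()}

print(total)   # 22644797893
print(shares)  # {'user': 71.55, 'spider': 22.91, 'automated': 5.54}
```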

Of the 5.54% of traffic labelled as automated, 84% is desktop, 16% is mobile web, and less than half a percent comes from the mobile app. This is consistent with the heuristic the community has been using to discard automated traffic when manually curating top pageview lists: for a few years now, pages with a very high percentage of desktop-only traffic have not appeared on those lists.

The graphs below show that the volume of traffic labelled as automated is stable, both in total and per access_method.
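The community heuristic mentioned above can be sketched as flagging pages whose views come overwhelmingly from desktop. The 0.9 threshold is hypothetical (the text only says "a very high percentage"), and the input layout, views per page broken down by access method, is an assumption.

```python
from __future__ import annotations

# Hypothetical threshold: the text only says "a very high percentage".
DESKTOP_SHARE_THRESHOLD = 0.9


def desktop_heavy_pages(views: dict[str, dict[str, int]]) -> list[str]:
    """Return titles whose views come overwhelmingly from desktop."""
    flagged = []
    for title, by_access in views.items():
        total = sum(by_access.values())
        if total and by_access.get("desktop", 0) / total >= DESKTOP_SHARE_THRESHOLD:
            flagged.append(title)
    return flagged


# Illustrative numbers only.
example_views = {
    "Page_A": {"desktop": 9500, "mobile web": 400, "mobile app": 100},
    "Page_B": {"desktop": 4000, "mobile web": 5500, "mobile app": 500},
    "Page_C": {},
}
print(desktop_heavy_pages(example_views))  # ['Page_A']
```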

Totals

By access-method

See also an earlier research analysis of agents without cookies.

Impact per project

The impact per project depends on the project's size.

- On big projects, the volume of traffic flagged as automated is relatively regular, similar to the global one (see graphs below).

- On smaller projects, however, the amount of so-called automated traffic is much less regular, with spikes or plateau periods. Interestingly, removing the automated traffic from the user bucket makes the remaining user traffic not only smaller but, in many cases, also much more stable (a better split between signal and noise).

The number associated with each project is its rank by number of pageviews for March 2020 (user, spider, and automated included).

Top 10 projects by number of views

en.wikipedia (1)

es.wikipedia (2)

ja.wikipedia (3)

de.wikipedia (4)

commons.wikimedia (5)

ru.wikipedia (6)

fr.wikipedia (7)

it.wikipedia (8)

zh.wikipedia (9)

pt.wikipedia (10)

Five random smaller projects

gl.wikipedia (80)

en.wikivoyage (81)

wikisource (158)

de.wikiquote (259)

el.wikiquote (473)

Code

Because the detection of automated traffic happens after pageviews are refined, the 'automated' value of the agent_type column is not present in the webrequest table; it appears in the pageview_hourly table instead. So records initially marked with agent_type='user' in webrequest might later be marked with agent_type='automated'. To know whether a particular set of requests from a given actor in webrequest is marked as 'automated', you need to calculate the actor signature. Predictions per actor signature are calculated hourly. Sample code to calculate actor signatures looks as follows:

```sql
-- Example 1: I am wondering about this IP and whether its traffic might be of automated nature
use wmf;
ADD JAR hdfs:///wmf/refinery/current/artifacts/refinery-hive-shaded.jar;
CREATE TEMPORARY FUNCTION get_actor_signature AS 'org.wikimedia.analytics.refinery.hive.GetActorSignatureUDF';

with questionable_requests as (
    select distinct
        get_actor_signature(ip, user_agent, accept_language, uri_host, uri_query, x_analytics_map) AS actor_signature
    from webrequest
    where year=2020 and month=4 and day=20 and hour=1
        and is_pageview=1
        and pageview_info['project'] = 'es.wikipedia'
        and agent_type = "user"
        and ip = 'some'  -- replace 'some' with the IP you are investigating
    limit 100
)
select
    AL.label,
    AL.label_reason,  -- this field explains why the label was assigned
    AL.actor_signature
from questionable_requests QR
join predictions.actor_label_hourly AL
    on (AL.actor_signature = QR.actor_signature)
where AL.year=2020 and AL.month=4 and AL.day=20 and AL.hour=1;

-- Example 2: For an hour of webrequest, select just the traffic from actors labeled as 'user'
use wmf;
ADD JAR hdfs:///wmf/refinery/current/artifacts/refinery-hive-shaded.jar;
CREATE TEMPORARY FUNCTION get_actor_signature AS 'org.wikimedia.analytics.refinery.hive.GetActorSignatureUDF';

with user_traffic as (
    select actor_signature
    from predictions.actor_label_hourly
    where year=2020 and month=4 and day=20 and hour=1
        and label = "user"
)
select
    W.pageview_info['page_title'],
    UT.actor_signature
from webrequest W
join user_traffic UT
    on (UT.actor_signature = get_actor_signature(W.ip, W.user_agent, W.accept_language, W.uri_host, W.uri_query, W.x_analytics_map))
where W.year=2020 and W.month=4 and W.day=20 and W.hour=1
    and W.is_pageview=1
    and W.pageview_info['project'] = 'es.wikipedia'
    and W.agent_type = "user";
```